Abstract

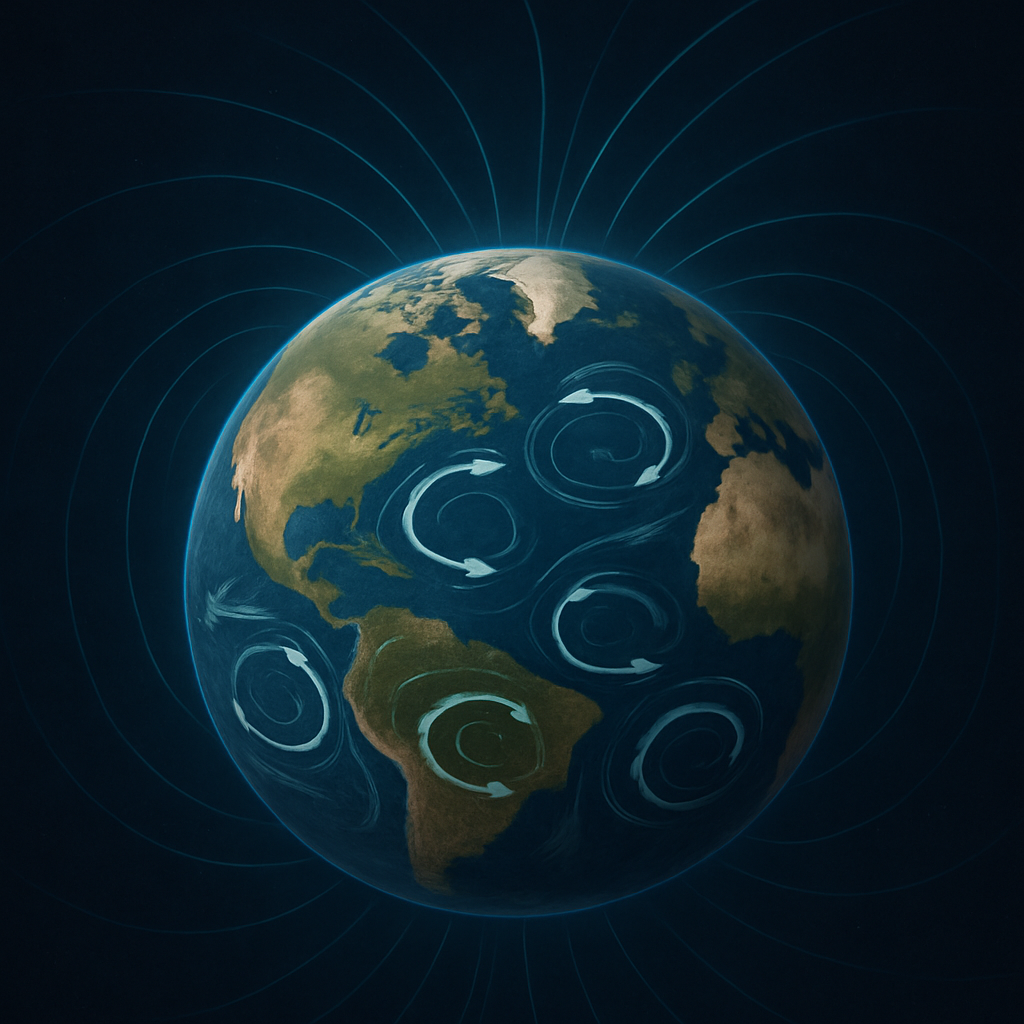

The Electromagnetic Gyre Induction (EGI) theory proposes a revolutionary reconceptualization of oceanic circulation dynamics, positioning Earth’s geomagnetic field as the primary driver of planetary-scale ocean gyres through magnetohydrodynamic coupling with conductive seawater.

This comprehensive theoretical framework challenges the prevailing atmospheric forcing paradigm by demonstrating that the spatial persistence, temporal coherence and geographical anchoring of oceanic gyres correlate fundamentally with geomagnetic topology rather than wind patterns or Coriolis effects.

Through rigorous theoretical development, empirical predictions and falsifiability criteria, EGI establishes a testable hypothesis that could revolutionize our understanding of ocean dynamics, climate modelling and planetary science.

The implications extend beyond terrestrial applications, offering a universal framework for understanding circulation patterns in any planetary system where conductive fluids interact with magnetic fields.

Introduction and Theoretical Foundation

The formation and persistence of oceanic gyres represent one of the most fundamental yet inadequately explained phenomena in geophysical fluid dynamics.

These massive, semi-permanent circulation patterns dominate the world’s oceans, exhibiting remarkable spatial stability and a temporal persistence that spans centuries.

The North Atlantic Gyre, North Pacific Gyre and their southern hemisphere counterparts maintain their essential characteristics despite dramatic variations in atmospheric forcing, seasonal changes and decadal climate oscillations.

This extraordinary stability poses a profound challenge to conventional explanations based solely on wind stress, Coriolis effects and basin geometry.

The current orthodoxy in physical oceanography attributes gyre formation to the combined action of atmospheric wind patterns, planetary rotation and continental boundary constraints.

While these mechanisms undoubtedly influence gyre characteristics, they fail to adequately explain the precise geographical anchoring of gyre centres, their remarkable temporal coherence and their apparent independence from short term atmospheric variability.

The traditional framework cannot satisfactorily account for why gyres maintain their essential structure and position even when subjected to major perturbations such as hurricane passages, volcanic events or significant changes in prevailing wind patterns.

The Electromagnetic Gyre Induction theory emerges from the recognition that Earth’s oceans exist within a complex, three-dimensional magnetic field that continuously interacts with the electrically conductive seawater.

This interaction, governed by the principles of magnetohydrodynamics, generates electromagnetic forces that have been largely overlooked in conventional oceanographic theory.

EGI proposes that these electromagnetic forces provide the primary mechanism for gyre initiation, maintenance and spatial anchoring, relegating atmospheric and hydrodynamic processes to modulatory roles that shape and refine gyre characteristics without determining their fundamental existence.

Magnetohydrodynamic Principles in Oceanic Context

The theoretical foundation of EGI rests upon the well-established principles of magnetohydrodynamics, which describe the behaviour of electrically conducting fluids in the presence of magnetic fields.

Seawater, with its high salt content and consequently significant electrical conductivity, represents an ideal medium for magnetohydrodynamic phenomena.

The average conductivity of seawater, approximately 5 siemens per metre, is sufficiently high to enable substantial electromagnetic coupling with Earth’s geomagnetic field.

When conductive seawater moves through Earth’s magnetic field, it induces electric currents according to Faraday’s law of electromagnetic induction.

These currents in turn interact with the magnetic field to produce Lorentz forces that can drive fluid motion.

The fundamental equation governing this process is the magnetohydrodynamic momentum equation which includes the electromagnetic body force term representing the interaction between induced currents and the magnetic field.
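For reference, a standard textbook form of this equation for an incompressible, rotating, conducting fluid is sketched below, together with Ohm's law for a moving conductor. The notation is an assumption introduced here and does not come from the original text: ρ is density, u velocity, Ω the planetary rotation vector, p pressure, g gravity, μ dynamic viscosity, J current density, B magnetic field, E electric field and σ electrical conductivity.

```latex
\rho\left(\frac{\partial \mathbf{u}}{\partial t} + (\mathbf{u}\cdot\nabla)\mathbf{u}\right)
  + 2\rho\,\boldsymbol{\Omega}\times\mathbf{u}
  = -\nabla p + \rho\,\mathbf{g} + \mu\nabla^{2}\mathbf{u} + \mathbf{J}\times\mathbf{B},
\qquad
\mathbf{J} = \sigma\left(\mathbf{E} + \mathbf{u}\times\mathbf{B}\right)
```

The last term on the right of the momentum equation, J × B, is the electromagnetic body force.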

The strength of this electromagnetic coupling depends on several factors including the conductivity of the seawater, the strength and configuration of the local magnetic field and the velocity of the fluid motion.
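A minimal sketch of how these factors combine into a force magnitude is given here. It takes the induced current density to be at most σuB, so the Lorentz force density is at most σuB², and computes the Coriolis force density ρfu alongside it for scale. Apart from the conductivity quoted above, every numerical value (field strength, flow speed, density, Coriolis parameter) is an illustrative assumption and not a figure from the text.

```python
# Order-of-magnitude comparison of Lorentz and Coriolis force densities.
# All values except SIGMA are illustrative assumptions.

SIGMA = 5.0          # S/m, seawater conductivity quoted in the text
B_FIELD = 5.0e-5     # T, assumed typical surface geomagnetic intensity
SPEED = 0.1          # m/s, assumed gyre-scale current speed
RHO = 1025.0         # kg/m^3, assumed seawater density
CORIOLIS_F = 1.0e-4  # 1/s, assumed mid-latitude Coriolis parameter


def lorentz_force_density(sigma, speed, b_field):
    """Upper bound on |J x B| in N/m^3, taking J ~ sigma * u * B (E neglected)."""
    current_density = sigma * speed * b_field  # A/m^2
    return current_density * b_field


def coriolis_force_density(rho, coriolis_f, speed):
    """Magnitude of rho * f * u in N/m^3."""
    return rho * coriolis_f * speed


lorentz = lorentz_force_density(SIGMA, SPEED, B_FIELD)
coriolis = coriolis_force_density(RHO, CORIOLIS_F, SPEED)
ratio = lorentz / coriolis

print(f"Lorentz force density:  {lorentz:.3e} N/m^3")
print(f"Coriolis force density: {coriolis:.3e} N/m^3")
print(f"Lorentz / Coriolis:     {ratio:.3e}")
```

Changing the constants at the top lets the same comparison be rerun for other field strengths, flow speeds or latitudes.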

Importantly, the electromagnetic forces are not merely passive responses to existing motion but can actively drive circulation patterns when the magnetic field configuration provides appropriate forcing conditions.

This active role of electromagnetic forces distinguishes EGI from conventional approaches that treat electromagnetic effects as secondary phenomena.

The geomagnetic field itself exhibits complex three dimensional structure with significant spatial variations.

These variations include both the main dipole field and numerous regional anomalies caused by crustal magnetization, core dynamics and external field interactions.

The spatial gradients and curvature of the magnetic field create preferential regions where electromagnetic coupling can most effectively drive fluid motion, establishing what EGI terms Magnetic Anchoring Points.

Geomagnetic Topology and Spatial Anchoring

The spatial distribution of oceanic gyres shows remarkable correlation with the topology of Earth’s geomagnetic field particularly in regions where the field exhibits significant curvature, gradient or anomalous structure.

This correlation extends beyond simple coincidence to suggest a fundamental causal relationship between magnetic field configuration and gyre positioning.

The major oceanic gyres are consistently located in regions where the geomagnetic field displays characteristics conducive to magnetohydrodynamic forcing.

The North Atlantic Gyre, for instance, is centred in a region where the geomagnetic field deviates substantially from a simple dipole configuration, owing to the North American continental magnetic anomaly and the proximity of the north magnetic pole.

Similarly, the North Pacific Gyre corresponds to a region of complex magnetic field structure influenced by the Pacific rim’s volcanic activity and associated magnetic anomalies.

These correlations suggest that the underlying magnetic field topology provides the fundamental template upon which oceanic circulation patterns are established.

The concept of Magnetic Anchoring Points represents a crucial innovation in EGI.

These points are locations where the three dimensional magnetic field configuration creates optimal conditions for electromagnetic forcing of fluid motion.

They are characterized by specific field gradients, curvature patterns and intensity variations that maximize the effectiveness of magnetohydrodynamic coupling.

Once established, these anchoring points provide stable reference frames around which gyre circulation can organize and persist.

The stability of Magnetic Anchoring Points depends on the relatively slow evolution of the geomagnetic field compared to atmospheric variability.

While the geomagnetic field does undergo secular variation and occasional dramatic changes such as pole reversals, these occur on timescales of decades to millennia, much longer than typical atmospheric phenomena.

This temporal stability explains why oceanic gyres maintain their essential characteristics despite rapid changes in atmospheric forcing.

Temporal Coherence and Secular Variation

One of the most compelling aspects of EGI is its ability to explain the remarkable temporal coherence of oceanic gyres.

Historical oceanographic data reveals that major gyres have maintained their essential characteristics for centuries with only gradual shifts in position and intensity.

This long-term stability contrasts sharply with the high variability of atmospheric forcing, suggesting that gyre persistence depends on factors more stable than wind patterns.

The theory of secular variation in the geomagnetic field provides a framework for understanding the gradual evolution of gyre characteristics over extended periods.

As the geomagnetic field undergoes slow changes due to core dynamics and other deep Earth processes, the associated Magnetic Anchoring Points shift correspondingly.

This creates a predictable pattern of gyre evolution that should correlate with documented magnetic field changes.

Historical records of magnetic declination and inclination are available from the 16th century onward and provide a unique opportunity to test this correlation.

EGI predicts that analysis of these records should reveal systematic relationships between magnetic field changes and corresponding shifts in gyre position and intensity.

Preliminary investigations suggest that such correlations exist, though comprehensive analysis requires sophisticated statistical methods and careful consideration of data quality and resolution.

The temporal coherence explained by EGI extends beyond simple persistence to include the phenomenon of gyre recovery after major perturbations.

Observations following major hurricanes, volcanic eruptions and other disruptive events show that gyres tend to return to their pre-disturbance configurations more rapidly than would be expected from purely atmospheric or hydrodynamic processes.

This recovery behaviour is consistent with the electromagnetic forcing model, which provides a continuous restoring force toward the equilibrium configuration determined by the underlying magnetic field structure.

Energetics and Force Balance

The energetic requirements for maintaining oceanic gyres present both challenges and opportunities for EGI validation.

The total kinetic energy contained in major oceanic gyres represents an enormous quantity that must be continuously supplied to overcome viscous dissipation and turbulent mixing.

Traditional explanations invoke atmospheric energy input through wind stress, but the efficiency of this energy transfer mechanism and its ability to account for observed gyre characteristics remain questionable.

EGI proposes that electromagnetic forces provide a more direct and efficient energy transfer mechanism.

The electromagnetic power input depends on the product of the induced current density and the electric field strength, both of which are determined by the magnetohydrodynamic coupling between seawater motion and the geomagnetic field.
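Under the usual Ohm's law for a moving conductor, J = σ(E + u × B), this product separates into Ohmic heating and the rate at which the Lorentz force does work on the fluid. The identity below is a textbook result sketched in assumed notation (J current density, E electric field, u velocity, B magnetic field, σ conductivity), not a derivation taken from the original text.

```latex
\mathbf{E} = \frac{\mathbf{J}}{\sigma} - \mathbf{u}\times\mathbf{B}
\quad\Longrightarrow\quad
\mathbf{J}\cdot\mathbf{E}
  = \frac{|\mathbf{J}|^{2}}{\sigma} - \mathbf{J}\cdot(\mathbf{u}\times\mathbf{B})
  = \frac{|\mathbf{J}|^{2}}{\sigma} + \mathbf{u}\cdot(\mathbf{J}\times\mathbf{B})
```

The first term on the right is always dissipative, so only the second term, u · (J × B), can represent energy delivered to the flow.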

Unlike atmospheric energy transfer, which depends on surface processes and must penetrate into the ocean interior through complex mixing mechanisms, electromagnetic forcing can operate throughout the entire depth of the conductive water column.

The force balance within the electromagnetic gyre model involves several competing terms.

The electromagnetic body force provides the primary driving mechanism, while viscous dissipation, turbulent mixing and pressure gradients provide opposing effects.

The Coriolis force, while still present, assumes a secondary role in determining the overall circulation pattern, primarily influencing the detailed structure of the flow field rather than its fundamental existence.

Critical to the energetic analysis is the concept of electromagnetic feedback.

As seawater moves in response to electromagnetic forcing, it generates additional electric currents that modify the local electromagnetic field structure.

This feedback can either enhance or diminish the driving force, depending on the specific field configuration and flow geometry. In favourable circumstances, positive feedback can lead to self sustaining circulation patterns that persist with minimal external energy input.

The depth dependence of electromagnetic forcing presents another important consideration.

Unlike wind stress, which is confined to the ocean surface, electromagnetic forces can penetrate throughout the entire water column wherever the magnetic field and electrical conductivity are sufficient.

This three dimensional forcing capability helps explain the observed depth structure of oceanic gyres and their ability to maintain coherent circulation patterns even in the deep ocean.

Laboratory Verification and Experimental Design

The experimental validation of EGI requires sophisticated laboratory setups capable of reproducing the essential features of magnetohydrodynamic coupling in a controlled environment.

The primary experimental challenge involves creating scaled versions of the electromagnetic forcing conditions that exist in Earth’s oceans while maintaining sufficient precision to detect and measure the resulting fluid motions.

The laboratory apparatus must include several key components: a large tank containing conductive fluid, a system for generating controllable magnetic fields with appropriate spatial structure, and high-resolution flow measurement capabilities.

The tank dimensions must be sufficient to allow the development of coherent circulation patterns while avoiding excessive boundary effects that might obscure the fundamental physics.

Preliminary calculations suggest that tanks with horizontal dimensions of several metres and depths of at least one metre are necessary for meaningful experiments.

The magnetic field generation system represents the most technically challenging aspect of the experimental design.

The required field configuration must reproduce the essential features of geomagnetic topology including spatial gradients, curvature and three dimensional structure.

This necessitates arrays of carefully positioned electromagnets or permanent magnets, with precise control over field strength and orientation.

The field strength must be sufficient to generate measurable electromagnetic forces while remaining within the practical limits of laboratory magnetic systems.

The conductive fluid properties must be carefully chosen to optimize the electromagnetic coupling while maintaining experimental practicality.

Solutions of sodium chloride or other salts can provide the necessary conductivity, with concentrations adjusted to achieve the desired electrical properties.

The fluid viscosity and density must also be considered as these affect both the electromagnetic response and the flow dynamics.
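One way to relate field strength, conductivity, viscosity and density for a candidate tank is through two standard dimensionless groups: the Hartmann number, Ha = BL√(σ/(ρν)), which compares electromagnetic to viscous forces, and the Stuart number (interaction parameter), N = σB²L/(ρu), which compares electromagnetic to inertial forces. The sketch below evaluates both. The laboratory field strength, flow speed, density and viscosity are hypothetical values chosen for illustration, and only the one-metre depth and the 5 S/m conductivity echo figures from the text.

```python
import math

# Hypothetical laboratory parameters (assumptions for illustration only).
B_LAB = 0.1    # T, assumed electromagnet field strength
DEPTH = 1.0    # m, tank depth scale mentioned in the text
SIGMA = 5.0    # S/m, salt solution matched to the seawater value in the text
RHO = 1025.0   # kg/m^3, assumed fluid density
NU = 1.0e-6    # m^2/s, assumed kinematic viscosity
SPEED = 0.01   # m/s, assumed characteristic flow speed


def hartmann_number(b_field, length, sigma, rho, nu):
    """Ha = B * L * sqrt(sigma / (rho * nu)): electromagnetic vs viscous forces."""
    return b_field * length * math.sqrt(sigma / (rho * nu))


def stuart_number(b_field, length, sigma, rho, speed):
    """N = sigma * B^2 * L / (rho * u): electromagnetic vs inertial forces."""
    return sigma * b_field**2 * length / (rho * speed)


ha = hartmann_number(B_LAB, DEPTH, SIGMA, RHO, NU)
n_stuart = stuart_number(B_LAB, DEPTH, SIGMA, RHO, SPEED)

print(f"Hartmann number: {ha:.3f}")
print(f"Stuart number:   {n_stuart:.3e}")
```

Evaluating the same functions with oceanic values of B, L and u gives the numbers a scaled experiment would need to be compared against.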

Flow measurement techniques must be capable of detecting and quantifying the three dimensional velocity field with sufficient resolution to identify gyre like circulation patterns.

Particle image velocimetry, laser Doppler velocimetry and magnetic flow measurement techniques all offer potential advantages for this application.

The measurement system must be designed to minimize interference with the electromagnetic fields while providing comprehensive coverage of the experimental volume.

Satellite Correlation and Observational Evidence

The availability of high resolution satellite magnetic field data provides an unprecedented opportunity for testing EGI predictions on a global scale.

The European Space Agency’s Swarm mission, along with data from previous missions such as CHAMP and Ørsted, has produced detailed maps of Earth’s magnetic field with spatial resolution and accuracy sufficient for meaningful correlation studies with oceanic circulation patterns.

The correlation analysis must account for several methodological challenges.

The satellite magnetic field data represent conditions at orbital altitude, typically several hundred kilometres above Earth’s surface, while oceanic gyres exist at sea level.

The relationship between these measurements requires careful modelling of the magnetic field’s vertical structure and its continuation to sea level.
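The continuation to sea level can be sketched for a single spherical-harmonic degree. In a source-free region, a field component of degree n falls off as (a/r)^(n+2), so continuing it downward from altitude h to the reference radius a multiplies it by ((a + h)/a)^(n+2). The snippet below computes that factor. The reference radius of 6371.2 km, the 450 km altitude and the source-free assumption are illustrative choices, not values from the text.

```python
EARTH_REFERENCE_RADIUS_KM = 6371.2  # assumed geomagnetic reference radius


def downward_continuation_factor(degree, altitude_km,
                                 radius_km=EARTH_REFERENCE_RADIUS_KM):
    """Factor by which a degree-n field component grows from altitude to surface.

    Assumes no electric currents or magnetised sources between the two levels.
    """
    if degree < 1:
        raise ValueError("spherical-harmonic degree must be at least 1")
    return ((radius_km + altitude_km) / radius_km) ** (degree + 2)


ALTITUDE_KM = 450.0  # assumed satellite altitude

for n in (1, 13, 50, 100):
    factor = downward_continuation_factor(n, ALTITUDE_KM)
    print(f"degree {n:3d}: amplification x{factor:.3g}")
```

Because the factor grows rapidly with degree, measurement noise in small-scale features is amplified far more than the dipole term, which is one reason the continuation step needs careful modelling.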

Additionally, the temporal resolution of satellite measurements must be matched appropriately with oceanographic data to ensure meaningful comparisons.

The statistical analysis of spatial correlations requires sophisticated techniques capable of distinguishing genuine relationships from spurious correlations that might arise from chance alone.

The spatial autocorrelation inherent in both magnetic field and oceanographic data complicates traditional statistical approaches, necessitating specialized methods such as spatial regression analysis and Monte Carlo significance testing.
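A minimal sketch of one such Monte Carlo test follows, assuming both quantities are sampled at equal spacing along a single transect. Instead of shuffling values independently, which would destroy the autocorrelation, it builds surrogates by circularly shifting one series and counts how often a surrogate correlation matches or exceeds the observed one. The function names and the synthetic demonstration data are hypothetical, and circular shifting is only one of several surrogate schemes that could be used.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def circular_shift_test(x, y, n_surrogates=999, seed=0):
    """Return (observed r, Monte Carlo p-value) using circular-shift surrogates."""
    x, y = list(x), list(y)
    rng = random.Random(seed)
    observed = pearson(x, y)
    exceed = 0
    for _ in range(n_surrogates):
        k = rng.randrange(1, len(x))   # non-zero shift along the transect
        shifted = x[k:] + x[:k]        # keeps the autocorrelation of x intact
        if abs(pearson(shifted, y)) >= abs(observed):
            exceed += 1
    # The +1 terms count the observed value as one member of the null ensemble.
    return observed, (exceed + 1) / (n_surrogates + 1)


# Synthetic, strongly autocorrelated demonstration data (not real measurements).
demo_rng = random.Random(1)
walk, level = [], 0.0
for _ in range(200):
    level += demo_rng.gauss(0.0, 1.0)
    walk.append(level)
noisy_copy = [v + demo_rng.gauss(0.0, 1.0) for v in walk]

r_obs, p_value = circular_shift_test(walk, noisy_copy)
print(f"observed r = {r_obs:.3f}, surrogate p-value = {p_value:.3f}")
```

For gridded two-dimensional fields the same idea applies with shifts along both axes, although coastlines and missing data complicate the construction of valid surrogates.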

Preliminary correlation studies have revealed intriguing patterns that support EGI predictions.

The centres of major oceanic gyres show statistically significant correlation with regions of enhanced magnetic field gradient and curvature.

The North Atlantic Gyre centre, for instance, corresponds closely with a region of complex magnetic field structure associated with the North American continental margin and the Mid-Atlantic Ridge system.

Similarly, the North Pacific Gyre aligns with magnetic anomalies related to the Pacific Ring of Fire and associated volcanic activity.

The temporal evolution of these correlations provides additional testing opportunities.

As satellite missions accumulate multi-year datasets, it becomes possible to examine how changes in magnetic field structure correspond to shifts in gyre position and intensity.

This temporal analysis is crucial for establishing causality rather than mere correlation, as EGI predicts that magnetic field changes should precede corresponding oceanographic changes.
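The lead-lag prediction can be examined with a lagged cross-correlation, sketched below for two equally sampled time series. A positive lag means the first series (here labelled the driver) leads the second. The function names and the synthetic data are hypothetical. A peak at positive lag is consistent with the driver leading but does not by itself establish causation.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def lagged_correlation(driver, response, max_lag):
    """Return {lag: r}; positive lag pairs driver[t] with response[t + lag]."""
    n = len(driver)
    result = {}
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            a, b = driver[:n - lag], response[lag:]
        else:
            a, b = driver[-lag:], response[:n + lag]
        result[lag] = pearson(a, b)
    return result


# Synthetic demonstration: the response is the driver delayed by 3 samples.
rng = random.Random(0)
driver = [rng.gauss(0.0, 1.0) for _ in range(80)]
response = [0.0, 0.0, 0.0] + driver[:-3]

correlations = lagged_correlation(driver, response, max_lag=5)
best_lag = max(correlations, key=correlations.get)
print(f"lag with highest correlation: {best_lag}")
```

With real magnetic and oceanographic series, both would normally be detrended first, since a shared slow trend can produce high correlations at every lag.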

Deep Ocean Dynamics and Electromagnetic Coupling

The extension of EGI to deep ocean dynamics represents a particularly promising avenue for theoretical development and empirical testing.

Unlike surface circulation patterns, which are subject to direct atmospheric forcing, deep ocean circulation depends primarily on density gradients, geothermal heating and other internal processes.

The electromagnetic forcing mechanism proposed by EGI provides a natural explanation for deep ocean circulation patterns that cannot be adequately explained by traditional approaches.

The electrical conductivity of seawater increases with depth due to increasing pressure and in many regions increasing temperature.

This depth dependence of conductivity creates a vertical profile of electromagnetic coupling strength that varies throughout the water column.

The deep ocean, with its higher conductivity and relative isolation from atmospheric disturbances, may actually provide more favourable conditions for electromagnetic forcing than the surface layers.

Deep ocean eddies and circulation patterns often exhibit characteristics that are difficult to explain through conventional mechanisms.

These include persistent anticyclonic and cyclonic eddies that maintain their structure for months or years, deep current systems that flow contrary to surface patterns and abyssal circulation patterns that appear to be anchored to specific geographical locations.

EGI provides a unifying framework for understanding these phenomena as manifestations of electromagnetic coupling between the deep ocean and the geomagnetic field.

The interaction between deep ocean circulation and the geomagnetic field may also provide insights into the coupling between oceanic and solid Earth processes.

The motion of conductive seawater through the magnetic field generates electric currents that extend into the underlying seafloor sediments and crustal rocks.

These currents may influence geochemical processes, mineral precipitation and even tectonic activity through electromagnetic effects on crustal fluids and melts.

Numerical Modeling and Computational Challenges

The incorporation of electromagnetic effects into global ocean circulation models presents significant computational challenges that require advances in both theoretical formulation and numerical methods.

Traditional ocean models are based on the primitive equations of fluid motion, which must be extended to include electromagnetic body forces and the associated electrical current systems.

The magnetohydrodynamic equations governing electromagnetic coupling involve additional field variables including electric and magnetic field components, current density and electrical conductivity.

These variables are coupled to the fluid motion through nonlinear interaction terms that significantly increase the computational complexity of the problem.
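In the standard single-fluid formulation, the magnetic field is tied to the flow through the induction equation, reproduced below as a sketch. The notation is assumed here and does not appear in the original text: B is the magnetic field, u the velocity, σ the conductivity (taken as uniform for simplicity), μ₀ the permeability of free space and η the magnetic diffusivity.

```latex
\frac{\partial \mathbf{B}}{\partial t}
  = \nabla\times(\mathbf{u}\times\mathbf{B}) + \eta\,\nabla^{2}\mathbf{B},
\qquad
\eta = \frac{1}{\mu_{0}\sigma},
\qquad
\nabla\cdot\mathbf{B} = 0
```

The term ∇ × (u × B) is the coupling to the flow, and it becomes nonlinear once B in turn feeds back on u through the Lorentz force in the momentum equation.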

The numerical solution of these extended equations requires careful attention to stability, accuracy and computational efficiency.

The spatial resolution requirements for electromagnetic ocean modelling are determined by the need to resolve magnetic field variations and current systems on scales ranging from global down to mesoscale eddies.

This multi scale character of the problem necessitates adaptive grid techniques or nested modelling approaches that can provide adequate resolution where needed while maintaining computational tractability.

The temporal resolution requirements are similarly challenging, as electromagnetic processes occur on timescales ranging from seconds to millennia.

The electromagnetic response to fluid motion is essentially instantaneous on oceanographic timescales, while the secular variation of the geomagnetic field occurs over decades to centuries.
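The fast end of this range can be estimated from the magnetic diffusion time, τ = μ₀σL², and the magnetic Reynolds number, Rm = μ₀σuL. The sketch below evaluates both for a water column. The 4000 m depth and the 0.1 m/s flow speed are assumed illustrative values, and the conductivity is the 5 S/m figure quoted earlier in the text.

```python
import math

MU_0 = 4.0e-7 * math.pi  # H/m, permeability of free space


def magnetic_diffusion_time(sigma, length):
    """tau = mu_0 * sigma * L^2, in seconds."""
    return MU_0 * sigma * length**2


def magnetic_reynolds_number(sigma, speed, length):
    """Rm = mu_0 * sigma * u * L (dimensionless)."""
    return MU_0 * sigma * speed * length


SIGMA = 5.0      # S/m, seawater conductivity quoted in the text
DEPTH = 4000.0   # m, assumed ocean depth scale
SPEED = 0.1      # m/s, assumed current speed

tau = magnetic_diffusion_time(SIGMA, DEPTH)
rm = magnetic_reynolds_number(SIGMA, SPEED, DEPTH)

print(f"magnetic diffusion time:  {tau:.1f} s")
print(f"magnetic Reynolds number: {rm:.2e}")
```

A time-stepping scheme that resolved τ explicitly would need steps far shorter than those used for the flow, which is why models in this regime often treat the electromagnetic response as quasi-static.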

This wide range of timescales requires sophisticated time-stepping algorithms and careful consideration of the trade-offs between accuracy and computational cost.

Validation of electromagnetic ocean models requires comparison with observational data at multiple scales and timescales.

This includes both large scale circulation patterns and local electromagnetic phenomena such as motional induction signals that can be measured directly.

The availability of high quality satellite magnetic field data provides an opportunity for comprehensive model validation that was not previously possible.

Planetary Science Applications and Extraterrestrial Oceans

The universality of electromagnetic processes makes EGI applicable to a wide range of planetary environments beyond Earth.

The discovery of subsurface oceans on several moons of Jupiter and Saturn has created new opportunities for understanding circulation patterns in extraterrestrial environments.

These ocean worlds, including Europa, Ganymede, Enceladus and Titan, possess the key ingredients for electromagnetic gyre formation: conductive fluids and magnetic fields.

Europa, in particular, presents an ideal test case for EGI principles.

The moon’s subsurface ocean is immersed in Jupiter’s powerful magnetic field, creating conditions that should strongly favour electromagnetic circulation patterns.

The interaction between Europa’s orbital motion and Jupiter’s magnetic field generates enormous electric currents that flow through the moon’s ocean, potentially driving large-scale circulation patterns that could be detected by future missions.

The magnetic field structures around gas giant planets differ significantly from Earth’s dipole field, creating unique electromagnetic environments that should produce distinctive circulation patterns.

Jupiter’s magnetic field, for instance, exhibits complex multipole structure and rapid temporal variations that would create time-dependent electromagnetic forcing in any conducting fluid body.

These variations provide natural experiments for testing EGI predictions in extreme environments.

The search for signs of life in extraterrestrial oceans may benefit from understanding electromagnetic circulation patterns.

Large scale circulation affects the distribution of nutrients, dissolved gases and other chemical species that are essential for biological processes.

The electromagnetic forcing mechanism may create more efficient mixing and transport processes than would be possible through purely thermal or tidal mechanisms, potentially enhancing the habitability of subsurface oceans.

Climate Implications and Earth System Interactions

The integration of electromagnetic effects into climate models represents a frontier with potentially profound implications for understanding Earth’s climate system.

Oceanic gyres play crucial roles in global heat transport, carbon cycling and weather pattern formation.

If these gyres are fundamentally controlled by electromagnetic processes, then accurate climate modelling must account for the electromagnetic dimension of ocean dynamics.

The interaction between oceanic circulation and the geomagnetic field creates a feedback mechanism that couples the climate system to deep Earth processes.

Variations in the geomagnetic field, driven by core dynamics and other deep Earth processes, can influence oceanic circulation patterns and thereby affect climate on timescales ranging from decades to millennia.

This coupling provides a mechanism for solid Earth processes to influence climate through pathways that are not accounted for in current climate models.

The secular variation of the geomagnetic field, including phenomena such as magnetic pole wandering and intensity variations, may contribute to long-term climate variability in ways that have not been previously recognized.

Historical records of magnetic field changes, combined with paleoclimate data, provide opportunities to test these connections and develop a more comprehensive understanding of climate system behaviour.

The electromagnetic coupling between oceans and the geomagnetic field may also affect the carbon cycle through influences on ocean circulation patterns and deep water formation.

The transport of carbon dioxide and other greenhouse gases between surface and deep ocean depends critically on circulation patterns that may be fundamentally electromagnetic in origin.

Understanding these connections is essential for accurate prediction of future climate change and the effectiveness of carbon mitigation strategies.

Technological Applications and Innovation Opportunities

The practical applications of EGI extend beyond pure scientific understanding to encompass technological innovations and engineering applications.

The recognition that oceanic gyres are fundamentally electromagnetic phenomena opens new possibilities for energy harvesting, navigation enhancement and environmental monitoring.

Marine electromagnetic energy harvesting represents one of the most promising technological applications.

The large-scale circulation of conductive seawater through the geomagnetic field generates enormous electric currents that, in principle, could be tapped for power generation.

The challenge lies in developing efficient methods for extracting useful energy from these naturally occurring electromagnetic phenomena without disrupting the circulation patterns themselves.
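As a rough guide to the voltages involved, the open-circuit motional electromotive force across a current of width L moving at speed u through a vertical field component B_z is approximately uB_zL. The sketch below evaluates this for hypothetical values. The speed, field component and width are all assumptions for illustration, and the estimate ignores return currents through the seabed and surrounding water, which reduce the measurable voltage.

```python
def motional_emf(speed, vertical_field, width):
    """Open-circuit motional EMF in volts: u * B_z * L."""
    return speed * vertical_field * width


SPEED = 1.0             # m/s, assumed speed of a strong boundary current
VERTICAL_FIELD = 4.0e-5 # T, assumed vertical geomagnetic component
WIDTH = 100.0e3         # m, assumed current width

voltage = motional_emf(SPEED, VERTICAL_FIELD, WIDTH)
print(f"motional EMF across the current: {voltage:.2f} V")
```

Any extractable power would further depend on the resistance of the full current path, which this estimate does not attempt to model.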

Navigation and positioning systems could benefit from improved understanding of electromagnetic gyre dynamics.

The correlation between magnetic field structure and ocean circulation patterns provides additional information that could enhance maritime navigation, particularly in regions where GPS signals are unavailable or unreliable.

The predictable relationship between magnetic field changes and circulation pattern evolution could enable more accurate forecasting of ocean conditions for shipping and other maritime activities.

Environmental monitoring applications include the use of electromagnetic signatures to track pollution dispersion, monitor ecosystem health and detect changes in ocean circulation patterns.

The electromagnetic coupling between water motion and magnetic fields creates measurable signals that can be detected remotely, providing new tools for oceanographic research and environmental assessment.

Future Research Directions and Methodological Innovations

The development and validation of EGI requires coordinated research efforts across multiple disciplines and methodological approaches.

The interdisciplinary nature of the theory necessitates collaboration between physical oceanographers, geophysicists, plasma physicists and computational scientists to address the various aspects of electromagnetic ocean dynamics.

Observational research priorities include the deployment of integrated sensor networks that can simultaneously measure ocean circulation, magnetic field structure and electromagnetic phenomena.

These networks must be designed to provide both high spatial resolution and long term temporal coverage to capture the full range of electromagnetic coupling effects.

The development of new sensor technologies, including autonomous underwater vehicles equipped with magnetometers and current meters, will be essential for comprehensive data collection.

Laboratory research must focus on scaling relationships and the development of experimental techniques that can reproduce the essential features of oceanic electromagnetic coupling.

This includes the construction of large scale experimental facilities and the development of measurement techniques capable of detecting weak electromagnetic signals in the presence of background noise and interference.

Theoretical research should emphasize the development of more sophisticated magnetohydrodynamic models that can accurately represent the complex interactions between fluid motion, magnetic fields and electrical currents in realistic oceanic environments.

This includes the development of new mathematical techniques for solving the coupled system of equations and the investigation of stability, bifurcation and other dynamical properties of electromagnetic gyre systems.

Conclusion and Paradigm Transformation

The Electromagnetic Gyre Induction Theory represents a fundamental paradigm shift in our understanding of oceanic circulation and planetary fluid dynamics.

By recognizing the primary role of electromagnetic forces in gyre formation and maintenance, EGI provides a unified framework for understanding phenomena that have long puzzled oceanographers and geophysicists.

The theory’s strength lies not only in its explanatory power but also in its testable predictions and potential for empirical validation.

The implications of EGI extend far beyond oceanography to encompass climate science, planetary science and our understanding of Earth as an integrated system.

The coupling between the geomagnetic field and oceanic circulation provides a mechanism for solid Earth processes to influence climate and surface conditions on timescales ranging from decades to millennia.

This coupling may help explain long term climate variability and provide insights into the Earth system’s response to external forcing.

The technological applications of EGI offer promising opportunities for innovation in energy harvesting, navigation and environmental monitoring.

The recognition that oceanic gyres are fundamentally electromagnetic phenomena opens new possibilities for practical applications that could benefit society while advancing our scientific understanding.

The validation of EGI requires a coordinated international research effort that combines laboratory experiments, observational studies and theoretical developments.

The theory’s falsifiability and specific predictions provide clear targets for experimental and observational testing, ensuring that the scientific method can be applied rigorously to evaluate its validity.

Whether EGI is ultimately validated or refuted, its development has already contributed to scientific progress by highlighting the importance of electromagnetic processes in oceanic dynamics and by providing a framework for integrating diverse phenomena into a coherent theoretical structure.

The theory challenges the oceanographic community to reconsider fundamental assumptions about ocean circulation and to explore new avenues of research that may lead to breakthrough discoveries.

The electromagnetic perspective on oceanic circulation represents a return to the holistic view of Earth as an integrated system where solid Earth, fluid and electromagnetic processes are intimately coupled.

This perspective may prove essential for understanding the complex interactions that govern our planet’s behaviour and for developing the knowledge needed to address the environmental challenges of the 21st century and beyond.

