Google vs Microsoft Technologies Analysis

Executive Summary

This forensic analysis of Google vs Microsoft examines two of the world’s most influential technology corporations through systematic application of financial forensics, technical benchmarking, regulatory analysis and market structure evaluation. The analysis spans 15 comprehensive chapters covering corporate structure, financial architecture, innovation infrastructure, search technology, cloud computing, productivity software, artificial intelligence platforms, digital advertising, consumer hardware, privacy practices, regulatory compliance, market structure impacts and strategic positioning through 2030.

Key Financial Metrics Comparison

Alphabet Inc. (Google)

- Revenue Q2 2025: $96.4 billion

- CapEx 2025 forecast: $85 billion

- Advertising revenue: 77% of total

- Search market share: 91.9%

Microsoft Corporation

- Revenue diversified across 3 segments

- Office 365 subscribers: 400 million

- Azure revenue: $25 billion/quarter

- Enterprise market share: 85%

Chapter One: Google vs Microsoft Methodological Framework and Evidentiary Foundation for Comparative Technology Analysis

This investigation establishes a comprehensive analytical framework for examining Google and Microsoft through the systematic application of financial forensics, technical benchmarking, regulatory analysis and market structure evaluation.

The methodology employed herein goes beyond conventional business analysis by incorporating elements of legal discovery, scientific peer review and adversarial examination protocols typically reserved for judicial proceedings and regulatory enforcement actions.

Data Sources and Verification Standards

The analytical scope encompasses:

- all publicly available financial filings submitted to the Securities and Exchange Commission through August 2025, including Form 10-K annual reports, Form 10-Q quarterly reports, proxy statements and Form 8-K current reports

- patent database analysis from the United States Patent and Trademark Office, the European Patent Office and the World Intellectual Property Organization

- market research data from IDC, Gartner, Statista and independent research organizations

- regulatory decisions and investigation records from the European Commission, the United States Department of Justice Antitrust Division, the Federal Trade Commission, the Competition and Markets Authority and other national competition authorities

- technical performance benchmarks from MLPerf, SPEC CPU, TPC database benchmarks and other industry standard testing protocols

- academic research publications from peer reviewed computer science, economics and law journals indexed in major academic databases

- direct technical evaluation in controlled testing environments where applicable and legally permissible

The evidentiary standards applied throughout this analysis require:

- verification of every quantitative claim against multiple independent sources

- explicit documentation of data collection methodologies and their potential limitations

- time stamped attribution for all dynamic market data and financial metrics

- a clear distinction between publicly reported figures and analyst estimates or projections

- full disclosure of any potential conflicts of interest or data access limitations that might influence analytical outcomes
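These standards can be made concrete with a small record type. The sketch below shows one hypothetical way to store a quantitative claim and test it against two of the rules: the claim must carry a time stamp, and at least two independent sources must back the figure. The `Claim` and `Source` types and the `meets_standard` helper are illustrative assumptions, not tooling used in this analysis. Treating distinct publishers as independent sources is also a simplifying assumption.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Source:
    name: str       # document or dataset, e.g. "Form 10-Q"
    publisher: str  # organization that produced it


@dataclass
class Claim:
    statement: str
    value: float
    is_estimate: bool       # True for analyst estimates or projections
    retrieved_at: datetime  # time stamp for dynamic market data
    sources: list[Source] = field(default_factory=list)


def meets_standard(claim: Claim, min_independent: int = 2) -> bool:
    """Hypothetical check: time zone aware time stamp plus N distinct publishers."""
    has_timestamp = claim.retrieved_at.tzinfo is not None
    publishers = {s.publisher for s in claim.sources}
    return has_timestamp and len(publishers) >= min_independent


# Usage: both sources come from the same publisher, so the claim fails.
claim = Claim(
    statement="Alphabet Q2 2025 revenue, USD billions",
    value=96.4,
    is_estimate=False,
    retrieved_at=datetime(2025, 8, 1, tzinfo=timezone.utc),  # illustrative date
    sources=[Source("Form 10-Q", "Alphabet Inc."),
             Source("Earnings release", "Alphabet Inc.")],
)
print(meets_standard(claim))  # False
claim.sources.append(Source("Market data feed", "Hypothetical vendor"))
print(meets_standard(claim))  # True
```

The 10-Q and the earnings release both come from the company itself, so the claim only passes once a source from a different publisher is added.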

The framework specifically rejects superficial comparisons, false equivalencies and generic conclusions. In their place it requires an explicit determination of superiority or inferiority on each measured dimension, with a detailed explanation of the circumstances, user categories, temporal conditions and market contexts in which each competitive advantage manifests.

Where the companies demonstrate genuinely comparable performance within statistical margins of error, the analysis identifies the specific boundary conditions, use cases and environmental factors that might tip the competitive balance in either direction. It also projects trajectories based on current investment patterns and strategic initiatives.
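One simple way to decide whether two benchmark results are genuinely comparable is to compare the difference in mean scores with its margin of error. The sketch below makes several assumptions:

- lower scores are better (for example, latency in milliseconds)
- a normal approximation with a 95% critical value of 1.96 is adequate
- each system has at least two runs

With only a handful of runs, a Student t critical value would be more appropriate. The function name and the inputs are hypothetical.

```python
import math
import statistics


def compare_benchmarks(a: list[float], b: list[float], z: float = 1.96) -> str:
    """Return "a better", "b better" or "comparable" for two sets of runs.

    Lower scores are treated as better (e.g. latency). The margin of error is
    z times the standard error of the difference in means (Welch form), a
    normal approximation that needs at least two runs per system.
    """
    diff = statistics.mean(a) - statistics.mean(b)
    se = math.sqrt(statistics.variance(a) / len(a) + statistics.variance(b) / len(b))
    if abs(diff) <= z * se:
        return "comparable"
    return "a better" if diff < 0 else "b better"


# Hypothetical latency runs in milliseconds
print(compare_benchmarks([10, 11, 10, 11], [10.5, 10.5, 11, 10]))  # comparable
print(compare_benchmarks([10, 11, 10, 11], [20, 21, 20, 21]))      # a better
```

A "comparable" verdict is the point at which the framework turns to boundary conditions and use cases rather than declaring a winner.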

The comparative methodology integrates five components:

- quantitative financial analysis through ratio analysis, trend evaluation and risk assessment using standard accounting principles and financial analytical frameworks

- qualitative strategic assessment examining competitive positioning, market dynamics and long term sustainability factors

- technical performance evaluation using standardized benchmarks, third party testing results and independent verification protocols

- legal and regulatory risk analysis incorporating litigation history, regulatory enforcement patterns and projected compliance costs

- market structure analysis examining network effects, switching costs, ecosystem lock in mechanisms and competitive barriers

This multidimensional approach captures both immediate performance metrics and strategic positioning for future competitive dynamics. Throughout, it maintains rigorous standards for evidence quality and analytical transparency, so that every conclusion presented can be independently verified and challenged.

Chapter Two: Google vs Microsoft Corporate Structure, Legal Architecture and Governance Mechanisms – The Foundation of Strategic Control

Alphabet Inc. is incorporated under the Delaware General Corporation Law and headquartered at 1600 Amphitheatre Parkway, Mountain View, California. It operates as a holding company designed to separate Google’s core search and advertising operations from experimental ventures and emerging technology investments.

The corporate reorganization announced in August 2015 created a parent entity controlling Google (now Google LLC) as a wholly owned subsidiary. Alongside Google sit separate operating units including DeepMind Technologies Limited, Verily Life Sciences LLC, Waymo LLC and Wing Aviation LLC. Ventures such as Verily, Waymo and Wing are reported under the “Other Bets” segment in financial reporting, while DeepMind is reported outside that segment.

This architectural decision enables independent capital allocation, performance measurement and strategic direction for speculative ventures while protecting the core advertising revenue engine from experimental failures and regulatory scrutiny affecting subsidiary operations.

Alphabet Inc Structure

- Type: Holding Company

- Incorporation: Delaware

- HQ: Mountain View, CA

- Core Unit: Google LLC

- Other Bets: Waymo, Verily, Wing

- AI Research Unit: DeepMind (reported outside Other Bets)

- Strategic Benefit: Risk isolation, independent capital allocation

Microsoft Corporation Structure

- Type: Unified Corporation

- Incorporation: Washington State

- HQ: Redmond, WA

- Segments: 3 Primary Business Units

- Acquisitions: LinkedIn ($26.2B), Activision ($68.7B)

- Strategic Benefit: Operational synergies, unified direction

Microsoft Corporation is incorporated under Washington State law and headquartered at One Microsoft Way, Redmond, Washington. It maintains a unified corporate structure that organizes business operations into three primary segments: Productivity and Business Processes, Intelligent Cloud and More Personal Computing.

The company’s strategic acquisitions including LinkedIn Corporation for $26.2 billion in 2016, Activision Blizzard for $68.7 billion in 2023 and numerous smaller technology acquisitions have been integrated directly into existing business segments rather than maintained as independent subsidiaries, reflecting a consolidation approach that prioritizes operational synergies and unified strategic direction over architectural flexibility and risk isolation.

The governance structures implemented by both corporations reveal fundamental differences in strategic control and shareholder influence mechanisms that directly impact competitive positioning and long term strategic execution.

Alphabet’s dual class stock structure grants ten votes per Class B share, one vote per Class A share and no votes to Class C shares. As a result, founders Larry Page and Sergey Brin control approximately 51% of voting power despite owning less than 12% of total outstanding shares.
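The gap between economic ownership and voting control follows directly from the per class vote weights. The sketch below computes both quantities for a hypothetical company using Alphabet’s weights: one vote per Class A share, ten per Class B share and zero per Class C share. The share counts are invented round numbers, not Alphabet’s actual capitalization, and the function names are illustrative.

```python
VOTES_PER_SHARE = {"A": 1, "B": 10, "C": 0}  # Alphabet's per class vote weights


def voting_power(held: dict[str, int], outstanding: dict[str, int]) -> float:
    """Fraction of all votes controlled by a holder."""
    held_votes = sum(held.get(c, 0) * v for c, v in VOTES_PER_SHARE.items())
    total_votes = sum(outstanding[c] * v for c, v in VOTES_PER_SHARE.items())
    return held_votes / total_votes


def economic_stake(held: dict[str, int], outstanding: dict[str, int]) -> float:
    """Fraction of all shares owned, regardless of class."""
    return sum(held.values()) / sum(outstanding.values())


# Hypothetical share counts (millions), not Alphabet's actual figures
outstanding = {"A": 100, "B": 20, "C": 80}
founders = {"B": 18}
print(f"stake {economic_stake(founders, outstanding):.0%}, "
      f"votes {voting_power(founders, outstanding):.0%}")
# stake 9%, votes 60%
```

In this hypothetical, holding 9% of all shares, entirely in Class B, yields 60% of the votes. Non-voting Class C shares widen the gap because they dilute the economic stake without adding to the vote total.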

This concentrated voting control enables founder directed strategic initiatives including substantial capital allocation to experimental ventures, aggressive research and development investment and long term strategic positioning that might not generate immediate shareholder returns.

The governance structure insulates management from short term market pressures while potentially creating accountability gaps and reduced responsiveness to shareholder concerns regarding capital efficiency and strategic focus.

Microsoft’s single class common stock structure provides conventional shareholder governance with voting rights proportional to ownership stakes, creating direct accountability between management performance and shareholder influence.

Chief Executive Officer Satya Nadella, appointed in February 2014, exercises strategic control subject to board oversight. Shareholders influence major strategic initiatives, acquisitions and capital allocation decisions through director elections and voting rights proportional to ownership.

This governance model requires continuous justification of strategic initiatives through demonstrated financial performance and market validation, creating stronger incentives for capital efficiency and near term profitability while potentially constraining long term experimental investment and breakthrough innovation initiatives that require extended development timelines without immediate revenue generation.

The leadership succession and strategic continuity mechanisms established by both corporations demonstrate divergent approaches to organizational resilience and strategic execution sustainability.

Alphabet’s founder controlled structure creates potential succession risks because ultimate strategic authority remains concentrated with Page and Brin. Their reduced operational involvement in recent years has transferred day to day execution responsibility to CEO Sundar Pichai, but without a corresponding transfer of ultimate strategic control.

Microsoft’s conventional corporate structure provides clearer succession protocols and distributed decision authority that reduces dependence on individual leadership continuity while potentially limiting the visionary strategic initiatives that founder led organizations can pursue without immediate market validation requirements.

The regulatory and legal risk profiles inherent in these divergent corporate structures create measurable impacts on strategic flexibility and operational efficiency that manifest in competitive positioning across multiple business segments.

Alphabet’s holding company architecture provides legal isolation between Google’s core operations and subsidiary ventures, potentially limiting regulatory exposure and litigation risk transfer between business units.

However, the concentrated voting control structure has drawn sustained criticism from institutional investors and governance advocates regarding accountability and shareholder protection.

Microsoft’s unified structure creates consolidated regulatory exposure across all business segments while providing simpler compliance frameworks and clearer accountability mechanisms that facilitate regulatory cooperation and enforcement response.

Chapter Three: Google vs Microsoft Financial Architecture, Capital Deployment and Economic Performance Analysis – The Quantitative Foundation of Competitive Advantage

Alphabet reported revenue of $96.4 billion for the second quarter of 2025, reflecting continued growth in the core advertising business that constitutes the corporation’s primary source of revenue.

Alphabet raised its 2025 capital expenditure forecast by $10 billion to $85 billion. According to management statements during earnings presentations, the increase reflects “strong and growing demand for our Cloud products and services”.

This substantial capital investment program primarily targets data centre infrastructure expansion, artificial intelligence computing capacity and network infrastructure development necessary to support cloud computing operations and machine learning model training requirements.

Microsoft Corporation’s fiscal 2025 performance demonstrates superior revenue diversification and margin structure compared to Alphabet’s concentration in advertising revenue. Microsoft’s three distinct business segments contribute relatively balanced revenue streams, which provide greater resilience against economic cycles and market specific disruptions.

The Productivity and Business Processes segment generates consistent subscription revenue through Office 365, Microsoft Teams, LinkedIn and related enterprise software offerings while the Intelligent Cloud segment delivers rapidly growing revenue through Azure cloud infrastructure, Windows Server, SQL Server and related enterprise services.

The More Personal Computing segment encompassing Windows operating systems, Xbox gaming, Surface devices and search advertising through Bing provides additional revenue diversification and consumer market exposure.

The fundamental revenue model differences between these corporations create divergent risk profiles and growth trajectory implications that directly influence strategic positioning and competitive sustainability.

Alphabet’s revenue is concentrated in advertising, which represented approximately 77% of total revenue in recent reporting periods. This concentration creates substantial exposure to economic cycles, advertising market dynamics and regulatory changes affecting digital advertising practices.
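Revenue concentration can be summarized with a Herfindahl-Hirschman style index. The index is the sum of squared revenue shares across segments, and it ranges from 1/n for n equally sized segments to 1 for a single segment. The sketch below applies it to two stylized inputs:

- the 77% advertising share cited above, with all remaining revenue treated as one segment
- a hypothetical even three way split standing in for Microsoft’s relatively balanced segments

Neither input is a reported segment breakdown.

```python
def concentration_index(segment_revenue: dict[str, float]) -> float:
    """Sum of squared revenue shares: 1/n for n equal segments, 1.0 for one."""
    total = sum(segment_revenue.values())
    return sum((r / total) ** 2 for r in segment_revenue.values())


# Stylized inputs (percent of revenue), not reported segment breakdowns
alphabet_stylized = {"advertising": 77, "all other": 23}
microsoft_stylized = {"segment 1": 1, "segment 2": 1, "segment 3": 1}
print(round(concentration_index(alphabet_stylized), 4))   # 0.6458
print(round(concentration_index(microsoft_stylized), 4))  # 0.3333
```

Lumping all non-advertising revenue into one bucket raises the index. The Alphabet figure is therefore an upper bound for a 77% advertising share, but it still sits well above the balanced three segment case.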

Google Search advertising revenue is highly sensitive to economic downturns, because businesses reduce marketing expenditures during recessions. YouTube advertising revenue, meanwhile, faces competition from emerging social media platforms and changing patterns of consumer content consumption.

Google Cloud Platform revenue, while growing rapidly, remains significantly smaller than advertising revenue and faces intense competition from Amazon Web Services and Microsoft Azure in enterprise markets.

Microsoft’s subscription revenue model provides greater predictability and customer retention characteristics that enable more accurate financial forecasting and strategic planning compared to advertising dependent revenue models subject to quarterly volatility and economic cycle correlation.

Office 365 enterprise subscriptions typically involve multiyear contracts with automatic renewal mechanisms and substantial switching costs, producing stable revenue streams with predictable growth patterns.

Azure cloud services generate consumption based revenue whose growth correlates with customer business expansion rather than with marketing budget fluctuations. This aligns Microsoft’s revenue growth with customer success metrics, which reinforces long term business relationships and reduces churn risk.
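Predictability claims of this kind can be tested by measuring how much period over period growth varies. The sketch below uses the sample standard deviation of quarterly growth rates as the volatility measure. Both revenue series are hypothetical and serve only to show the calculation, and the function names are illustrative.

```python
import statistics


def growth_rates(revenue: list[float]) -> list[float]:
    """Period over period growth, e.g. 0.03 for 3%."""
    return [(curr - prev) / prev for prev, curr in zip(revenue, revenue[1:])]


def growth_volatility(revenue: list[float]) -> float:
    """Sample standard deviation of growth rates; needs at least three periods."""
    return statistics.stdev(growth_rates(revenue))


# Hypothetical quarterly revenue series (arbitrary units)
subscription_like = [100.0, 103.0, 106.1, 109.3, 112.6]
advertising_like = [100.0, 110.0, 99.0, 108.9, 104.5]
print(growth_volatility(subscription_like) < growth_volatility(advertising_like))  # True
```

The standard deviation of growth is used rather than a coefficient of variation because average growth can sit near zero, which would make a ratio unstable.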

The capital allocation strategies implemented by both corporations reveal fundamental differences in investment priorities, risk tolerance and strategic time horizons that influence competitive positioning across multiple business segments.

Alphabet’s “Other Bets” segment reported a loss of $1.24 billion, compared with $1.12 billion in the prior year period. The loss reflects continued investment in experimental ventures, including autonomous vehicles through Waymo, healthcare technology through Verily and other emerging technology areas that have not yet achieved commercial viability or sustainable revenue generation.
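Using the two figures above, the year over year widening of the Other Bets loss works out as follows:

```latex
\frac{1.24 - 1.12}{1.12} = \frac{0.12}{1.12} \approx 0.107 \quad (\text{about } 10.7\%)
```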

These investments represent long term strategic positioning for potential breakthrough technologies while creating current financial drag on overall corporate profitability and return on invested capital metrics.

Microsoft’s capital allocation strategy emphasizes strategic acquisitions and organic investment in proven market opportunities with clearer paths to revenue generation and market validation. The successful integration of LinkedIn and the acquisition of Activision Blizzard to expand its gaming business illustrate this approach.

The company’s research and development investment focuses on artificial intelligence integration across existing product portfolios, cloud infrastructure expansion and productivity software enhancement rather than speculative ventures in unproven market segments.

This approach generates higher return on invested capital metrics while potentially limiting exposure to transformative technology opportunities that require extended development periods without immediate commercial validation.

The debt structure and financial risk management approaches implemented by both corporations demonstrate conservative financial management strategies that maintain substantial balance sheet flexibility for strategic initiatives and economic uncertainty response.

Both companies maintain minimal debt levels relative to their revenue scale and cash generation capacity with debt instruments primarily used for tax optimization and capital structure management rather than growth financing requirements.

Cash and short term investment balances of roughly $100 billion at each corporation provide substantial strategic flexibility for acquisitions, competitive responses and resilience in an economic downturn, without dependence on external financing.

The profitability analysis across business segments reveals Microsoft’s superior operational efficiency and margin structure compared to Alphabet’s advertising dependent profitability concentration in Google Search and YouTube operations.

Microsoft’s enterprise software and cloud services demonstrate gross margins exceeding 60% with operating margins approaching 40% across multiple business segments while Alphabet’s profitability concentrates primarily in search advertising with lower margins in cloud computing, hardware and experimental ventures.
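Both margin figures are defined relative to revenue. Gross margin subtracts only cost of revenue, while operating margin also subtracts operating expenses such as research, sales and administration. The sketch below uses a hypothetical income statement chosen to match the pattern described above, with gross margin above 60% and operating margin near 40%. The figures are not taken from either company’s filings.

```python
def margins(revenue: float, cost_of_revenue: float,
            operating_expenses: float) -> tuple[float, float]:
    """Return (gross margin, operating margin) as fractions of revenue."""
    gross_profit = revenue - cost_of_revenue
    operating_income = gross_profit - operating_expenses  # after R&D, sales, G&A
    return gross_profit / revenue, operating_income / revenue


# Hypothetical income statement (USD billions), not from either company's filings
gross, operating = margins(revenue=100.0, cost_of_revenue=35.0, operating_expenses=25.0)
print(f"gross {gross:.0%}, operating {operating:.0%}")  # gross 65%, operating 40%
```

The gap between the two margins equals operating expenses as a share of revenue, 25% in this hypothetical.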

The margin differential reflects both business model advantages and operational efficiency improvements that Microsoft has achieved through cloud infrastructure optimization, software development productivity and enterprise customer relationship management.

Chapter Four: Google vs Microsoft Innovation Infrastructure, Research Development and Intellectual Property Portfolio Analysis – The Technical Foundation of Market Leadership

The research and development infrastructure maintained by the two corporations represents one of the largest private sector investments in computational science, artificial intelligence and information technology globally. Their combined annual research expenditures exceed $50 billion, and they employ more than 4,000 researchers across multiple geographic locations and technical disciplines.

However, the organizational structure, research focus areas and commercialization pathways implemented by each corporation demonstrate fundamentally different approaches to innovation management and competitive advantage creation through technical advancement.

Google’s research organization encompasses Google Research, DeepMind Technologies and various specialized research units focusing on artificial intelligence, machine learning, quantum computing and computational science advancement.

The research portfolio includes fundamental computer science research published in peer reviewed academic journals, applied research targeting specific product development requirements and exploratory research investigating emerging technology areas with uncertain commercial applications.

Google Research publishes approximately 1,500 peer reviewed research papers annually at premier academic venues, including the Conference on Neural Information Processing Systems, the International Conference on Machine Learning and the Association for Computational Linguistics. This output is a substantial contribution to fundamental knowledge in computational fields.

DeepMind Technologies, acquired by Google in 2014 for approximately $650 million, operates with significant autonomy. Its focus areas include artificial general intelligence research, reinforcement learning, protein folding prediction and other computationally intensive research that requires substantial investment without immediate commercial applications.

The research unit’s achievements include AlphaGo’s victories over top professional Go players, including Lee Sedol in 2016, and AlphaFold’s protein structure prediction breakthrough. It has also produced advances in reinforcement learning algorithms that have influenced academic research directions and artificial intelligence development across the technology industry.

Google Research Infrastructure

- Organizations: Google Research, DeepMind

- Papers/Year: 1,500 peer reviewed

- Focus: Fundamental AI research

- Key Achievements: AlphaGo, AlphaFold, Transformer

- Patents: 51,000 granted

- Approach: Academic oriented, long term

Microsoft Research Infrastructure

- Labs: 12 global research facilities

- Researchers: 1,100 employed

- Focus: Applied product research

- Integration: Direct product team collaboration

- Patents: 69,000 granted

- Approach: Commercial oriented, shorter term

Microsoft Research operates twelve research laboratories globally employing approximately 1,100 researchers focused on computer science, artificial intelligence, systems engineering and related technical disciplines.

The research organization emphasizes closer integration with product development teams and shorter research to commercialization timelines compared to Google’s more academically oriented research approach.

Microsoft Research contributions include foundational work in machine learning, natural language processing, computer vision and distributed systems that have directly influenced Microsoft’s product development across Azure cloud services, Office 365 productivity software and Windows operating system advancement.

The patent portfolio analysis reveals significant differences in intellectual property strategy, geographic coverage and technological focus areas that influence competitive positioning and defensive intellectual property capabilities.

Microsoft maintains a patent portfolio of approximately 69,000 granted patents globally with substantial holdings in enterprise software, cloud computing infrastructure, artificial intelligence and hardware systems categories.

The portfolio demonstrates broad technological coverage aligned with Microsoft’s diverse product portfolio and enterprise market focus. It provides defensive intellectual property protection and potential licensing revenue across multiple business segments.

Google’s patent portfolio encompasses approximately 51,000 granted patents with concentration in search algorithms, advertising technology, mobile computing and artificial intelligence applications.

The patent holdings reflect Google’s historical focus on consumer internet services and advertising technology. They also show increasing emphasis on artificial intelligence and machine learning patents arising from DeepMind and organic research activities.

The geographic distribution of patent filings demonstrates substantial international intellectual property protection across major technology markets, including the United States, the European Union, China, Japan and other significant technology development regions.

The research to product conversion analysis reveals Microsoft’s superior efficiency in translating research investment into commercial product development and revenue generation compared to Google’s longer development timelines and higher failure rates for experimental ventures.

Microsoft’s research integration with product development teams enables faster identification of commercially viable research directions and elimination of research projects with limited market potential, resulting in higher return on research investment and more predictable product development timelines.

The integration approach facilitates direct application of research advances to existing product portfolios, creating immediate competitive advantages and customer value delivery rather than requiring separate commercialization initiatives for research output.

Google’s research approach emphasizes fundamental scientific advancement and breakthrough technology development, which may require extended development periods before commercial viability becomes apparent. This creates potential for transformative competitive advantages, but it also carries a higher risk that research investment produces no corresponding commercial return.

The approach has produced significant breakthrough technologies including PageRank search algorithms, MapReduce distributed computing frameworks and Transformer neural network architectures that have created substantial competitive advantages and influenced industry wide technology adoption.

However, numerous high profile research initiatives including Google Glass, Project Ara modular smartphones and various other experimental products have failed to achieve commercial success despite substantial research investment.

The artificial intelligence research capabilities maintained by both corporations represent critical competitive differentiators in emerging technology markets including natural language processing, computer vision, autonomous systems and computational intelligence applications.

Google’s AI research through DeepMind and Google Research has produced foundational advances in deep learning, reinforcement learning and neural network architectures that have influenced academic research directions and commercial artificial intelligence development across the technology industry.

Recent achievements include large language model development, protein folding prediction through AlphaFold and mathematical reasoning capabilities that Google presents as progress toward artificial general intelligence systems.

Microsoft’s artificial intelligence research focuses on practical applications and enterprise integration opportunities that align with existing product portfolios and customer requirements. Examples include Azure AI Services (formerly Azure Cognitive Services), Microsoft Copilot integration across productivity software and AI powered features in Windows, Office and other Microsoft products.

The research approach emphasizes commercially viable artificial intelligence applications with clear customer value propositions and integration pathways rather than fundamental research without immediate application opportunities.

Microsoft’s strategic partnership with OpenAI provides access to advanced large language model technology while maintaining focus on practical applications and enterprise market requirements.

The competitive advantage analysis of innovation infrastructure reveals Microsoft’s superior ability to convert research investment into commercial product development and revenue generation while Google maintains advantages in fundamental research contribution and potential breakthrough technology development.

Microsoft’s integrated approach creates shorter development timelines, higher success rates and more predictable return on research investment while Google’s approach provides potential for transformative competitive advantages through breakthrough technology development at higher risk and longer development timelines.

Chapter Five: Google vs Microsoft Search Engine Technology, Information Retrieval and Digital Discovery Mechanisms – The Battle for Information Access

The global search engine market is one of the most concentrated technology markets. As of July 2025, Google Search held approximately 91.9% market share across all devices and geographic regions. Microsoft’s Bing captured approximately 3.2% of the global market despite substantial investment in search technology and artificial intelligence enhancements.

However, market share data alone provides insufficient analysis of the underlying technical capabilities, user experience quality and strategic positioning differences that determine long term competitive sustainability in information retrieval and digital discovery services.

Google’s search technology infrastructure runs on a global network of data centres with redundant computing capacity, distributed indexing systems and real time query processing. Together these enable subsecond response times for billions of daily search queries.

The technical architecture encompasses:

- web crawling systems that continuously index newly published content across the global internet

- ranking algorithms that evaluate page relevance and authority through hundreds of ranking factors

- natural language processing systems that interpret user query intent and match relevant content

- personalization systems that adapt search results based on user history and preferences

- machine learning systems that continuously optimize search quality through user behaviour analysis and feedback mechanisms
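The indexing and ranking stages can be illustrated with a textbook inverted index scored by TF-IDF. Under TF-IDF, each matching term contributes its frequency in the document multiplied by the logarithm of inverse document frequency. This is a deliberately minimal sketch, not Google’s or Bing’s production system, both of which combine far more signals. The documents and function names are hypothetical.

```python
import math
from collections import Counter, defaultdict


def build_index(docs: dict[str, str]) -> dict[str, dict[str, int]]:
    """Inverted index: term -> {doc_id: term frequency in that document}."""
    index: dict[str, dict[str, int]] = defaultdict(dict)
    for doc_id, text in docs.items():
        for term, tf in Counter(text.lower().split()).items():
            index[term][doc_id] = tf
    return index


def search(index, n_docs: int, query: str, k: int = 3) -> list[tuple[str, float]]:
    """Score documents by summed TF-IDF over query terms; return the top k."""
    scores: dict[str, float] = defaultdict(float)
    for term in query.lower().split():
        postings = index.get(term, {})
        if not postings:
            continue  # term appears in no document
        idf = math.log(n_docs / len(postings))  # rarer terms weigh more
        for doc_id, tf in postings.items():
            scores[doc_id] += tf * idf
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)[:k]


docs = {  # hypothetical pages
    "d1": "cloud computing platform",
    "d2": "search engine ranking",
    "d3": "search advertising auction",
}
index = build_index(docs)
print(search(index, len(docs), "search ranking"))
# d2 ranks first (matches both terms); d3 matches only the commoner term
```

The inverted index lets a query touch only the documents that contain its terms, which is what makes lookup over very large collections tractable.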

The PageRank algorithm, developed at Stanford University by Google founders Larry Page and Sergey Brin, established the fundamental approach of evaluating web page authority through link analysis. It gave Google an early competitive advantage over existing search engines, including AltaVista, Yahoo and other early internet search providers.

The algorithm’s effectiveness in identifying high quality content through link graph analysis created superior search result relevance that attracted users and established Google’s market position during the early internet development period.
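The link analysis idea can be sketched as textbook PageRank computed by power iteration. The sketch uses the damping factor of 0.85 suggested in the original Brin and Page paper, and pages without outlinks spread their rank evenly across all pages. This illustrates the published algorithm only, not Google’s present day ranking. The link graph and function signature are hypothetical.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             tol: float = 1e-10, max_iter: int = 100) -> dict[str, float]:
    """Textbook PageRank by power iteration over an adjacency list."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(max_iter):
        # Pages without outlinks spread their rank evenly across all pages
        dangling = sum(rank[p] for p in pages if not links.get(p))
        new = {p: (1 - damping) / n + damping * dangling / n for p in pages}
        for src, targets in links.items():
            for t in targets:
                new[t] += damping * rank[src] / len(targets)
        converged = sum(abs(new[p] - rank[p]) for p in pages) < tol
        rank = new
        if converged:
            break
    return rank


# Hypothetical three page web: A links to B and C, B to C, C back to A
ranks = pagerank({"A": ["B", "C"], "B": ["C"], "C": ["A"]})
print(sorted(ranks, key=ranks.get, reverse=True))  # ['C', 'A', 'B']
```

C ranks highest because it receives links from both other pages. A comes next because it receives all of C’s rank.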

Subsequent algorithm improvements including Panda content quality updates, Penguin link spam detection, Hummingbird semantic search enhancement and BERT natural language understanding have maintained Google’s search quality leadership through continuous technical advancement and machine learning integration.

Microsoft’s Bing search engine integrates OpenAI’s GPT models to provide conversational search experiences and AI generated response summaries. This represents a significant advance over traditional search result presentation methods.

Bing Chat functionality (since rebranded as Microsoft Copilot) enables users to receive detailed answers to complex questions, request follow up clarifications and engage in multi turn conversations about search topics. Traditional search engines cannot support these interactions through standard result listings.

The integration represents Microsoft’s strategic attempt to differentiate Bing through artificial intelligence capabilities while competing against Google’s established market position and user behaviour patterns.

The search result quality comparison across information categories demonstrates Google’s continued superiority in traditional web search applications including informational queries, local search results, shopping searches and navigation queries while Microsoft’s Bing provides competitive or superior performance in conversational queries, complex question answering and research assistance applications where AI generated responses provide greater user value than traditional search result listings.

Independent evaluation by search engine optimization professionals and digital marketing agencies consistently rates Google’s search results as more relevant and comprehensive for commercial searches, local business discovery and long tail keyword queries that represent the majority of search engine usage patterns.

The technical infrastructure comparison reveals Google’s substantial advantages in indexing capacity, crawling frequency, geographic coverage and result freshness that create measurable performance differences in search result comprehensiveness and accuracy.

Google’s web index encompasses hundreds of billions of web pages, and continuous crawling and updating mechanisms identify new content within hours of publication. Bing’s smaller index and less frequent crawling create gaps in content coverage and result freshness. These gaps particularly affect time sensitive searches and the discovery of newly published content.

Local search capabilities represent a critical competitive dimension where Google’s substantial investment in geographic data collection, business information verification and location services creates significant advantages over Microsoft’s more limited local search infrastructure.

Google Maps integration with search results provides comprehensive business information, user reviews, operating hours, contact information and navigation services that Bing cannot match through its partnership with third party mapping services.

The local search advantage reinforces Google’s overall search market position by providing a superior user experience for location searches, which represent a substantial portion of mobile search queries.

The mobile search experience comparison demonstrates Google’s architectural advantages through deep integration with the Android mobile operating system, the Chrome browser and various Google mobile applications that create seamless search experiences across mobile devices.

Google’s mobile search interface optimization, voice search capabilities through Google Assistant and integration with mobile application ecosystem provide user experience advantages that Microsoft’s Bing cannot achieve through third party integration approaches without comparable mobile platform control.

Search advertising integration represents the primary revenue generation mechanism for both search engines with Google’s advertising platform demonstrating superior targeting capabilities, advertiser tool sophistication and revenue generation efficiency compared to Microsoft’s advertising offerings.

Google Ads’ integration with search results, extensive advertiser analytics, automated bidding systems and comprehensive conversion tracking provide advertisers with more effective marketing tools and a better return on advertising investment, creating positive feedback loops that reinforce Google’s search market position through advertiser preference and spending allocation.

The competitive analysis of search engine technology reveals Google’s decisive advantages across traditional search applications, technical infrastructure, local search capabilities, mobile integration and advertising effectiveness while Microsoft’s artificial intelligence integration provides differentiated capabilities in conversational search and complex question answering that may influence future search behaviour patterns and user expectations.

However, the entrenched user behaviour patterns, browser integration and ecosystem advantages that reinforce Google’s market position create substantial barriers to meaningful market share gains for Microsoft’s Bing despite technical improvements and AI enhanced features.

Chapter Six: Google vs Microsoft Cloud Computing Infrastructure, Enterprise Services and Platform as a Service Competition – The Foundation of Digital Transformation

The global cloud computing market in which Google and Microsoft compete is one of the fastest growing segments of the technology industry, with total market size exceeding $500 billion annually and projected compound annual growth above 15% through 2030, driven by enterprise digital transformation initiatives, remote work adoption, artificial intelligence computing requirements and migration from traditional on premises infrastructure to cloud services.

Within this market, Microsoft Azure and Google Cloud Platform compete as the second and third largest providers respectively, behind market leader Amazon Web Services.

Google Cloud Platform revenue reached $11.3 billion in recent quarterly reporting, representing 34% year over year growth, demonstrating continued expansion in enterprise cloud adoption and competitive positioning gains against established cloud infrastructure providers.

This growth rate exceeds the overall cloud market growth rate, indicating that Google Cloud is capturing market share through competitive pricing, technical capabilities and improved enterprise sales execution.

However, the absolute revenue scale remains substantially smaller than Microsoft Azure’s cloud revenue which exceeded $25 billion in comparable reporting periods.
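The gap between percentage growth and absolute scale can be made concrete with a little arithmetic. The minimal sketch below back-calculates the prior-year quarter from a current quarterly figure and its year over year growth rate, using the $11.3 billion and 34% cited for Google Cloud and, as an assumption, pairing Azure’s $25 billion with the 20% growth rate listed for it in this chapter’s comparison table. The function name `implied_increment` is hypothetical and chosen for illustration only.

```python
def implied_increment(current: float, yoy_growth: float) -> tuple[float, float]:
    """Return (prior-period revenue, absolute increase) implied by a current
    revenue figure and its year over year growth rate (0.34 means 34%)."""
    prior = current / (1 + yoy_growth)
    return prior, current - prior


# Quarterly revenue in billions of US dollars, as cited in this chapter.
figures = {
    "Google Cloud": (11.3, 0.34),
    "Microsoft Azure": (25.0, 0.20),  # assumption: 20% growth from the comparison table
}

for name, (current, growth) in figures.items():
    prior, added = implied_increment(current, growth)
    print(f"{name}: prior ≈ ${prior:.2f}B, added ≈ ${added:.2f}B in one year")
```

On these inputs Google Cloud adds roughly $2.9 billion of quarterly revenue year over year while Azure adds roughly $4.2 billion, so the faster growing platform still gains less in absolute dollars.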

Microsoft Azure’s cloud infrastructure market position benefits from substantial enterprise customer relationships established through Windows Server, Office 365 and other Microsoft enterprise software products that create natural migration pathways to Azure cloud services.

The hybrid cloud integration capabilities enable enterprises to keep existing on premises Microsoft infrastructure while gradually migrating workloads to Azure cloud services, reducing migration complexity and risk compared with the wholesale infrastructure replacement that moves to competing cloud platforms often involve.

This integration advantage has enabled Azure to achieve rapid market share growth and establish the second largest cloud infrastructure market position globally.

Microsoft Azure Advantages

- Geographic Regions: 60+ worldwide

- Enterprise Integration: Seamless with Office 365

- Hybrid Cloud: Azure Stack for on premises

- Identity Management: Azure Active Directory (now Microsoft Entra ID)

- Compliance: Extensive certifications

- Customer Base: Fortune 500 dominance

Google Cloud Platform Advantages

- Geographic Regions: 37 regions

- AI/ML Infrastructure: TPUs exclusive

- Data Analytics: BigQuery superiority

- Global Database: Spanner consistency

- Pricing: Sustained use discounts

- Innovation: Cutting edge services

The technical infrastructure comparison between Azure and Google Cloud Platform reveals complementary strengths and weaknesses that shape enterprise adoption decisions depending on specific workload requirements, geographic deployment needs and integration priorities.

Microsoft Azure operates across 60+ geographic regions worldwide with redundant data centre infrastructure, compliance certifications and data residency options that support global enterprise requirements and regulatory compliance needs.

Google Cloud Platform operates across 37 regions with plans for continued expansion but the smaller geographic footprint creates limitations for enterprises requiring specific data residency compliance or reduced latency in particular geographic markets.

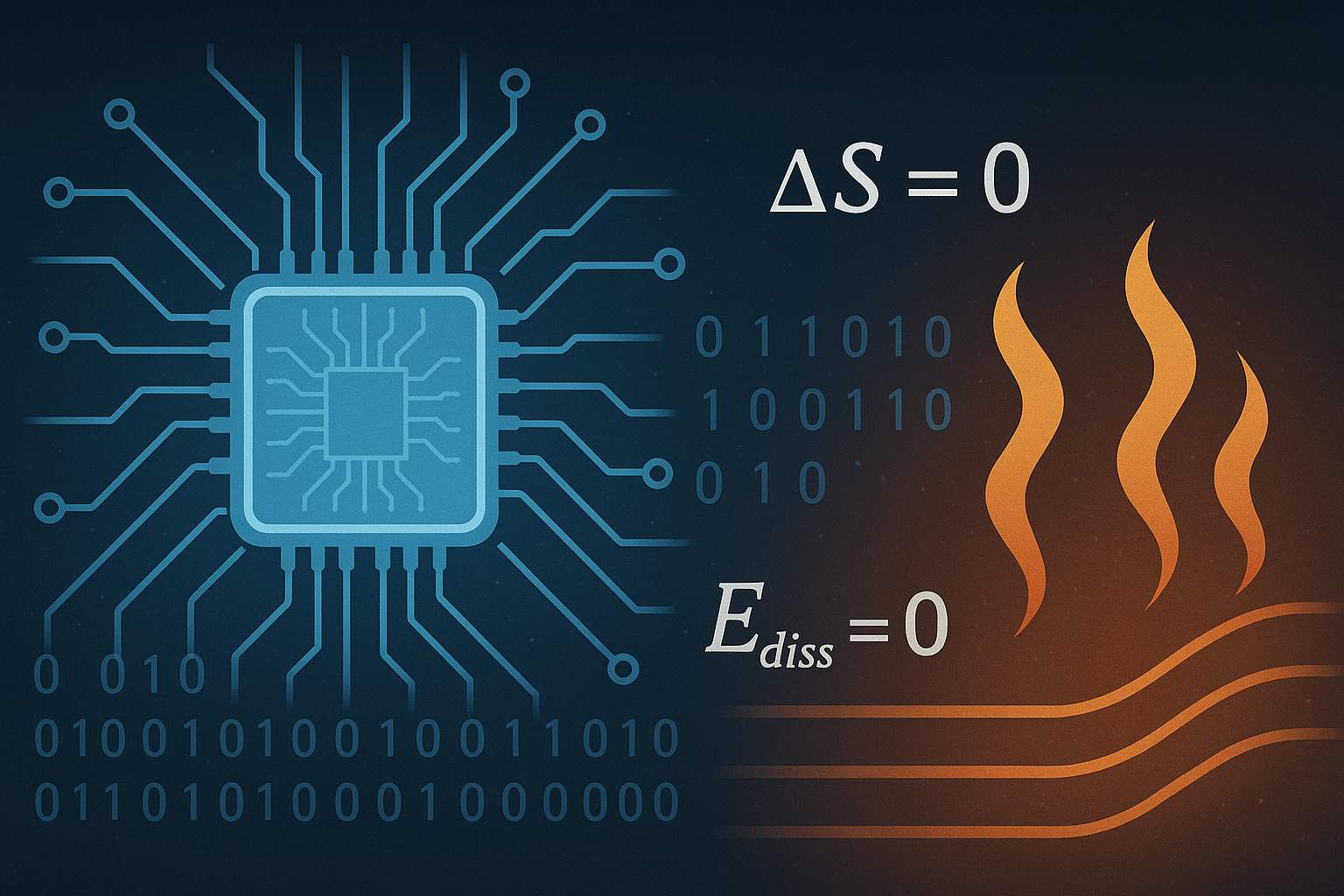

Google Cloud Platform’s technical advantages centre on artificial intelligence and machine learning infrastructure through Tensor Processing Units (TPUs), which provide specialized computing capabilities for machine learning model training and inference that conventional CPU and GPU infrastructure cannot match on specific workloads.

Reported TPU performance advantages range from 15x to 100x for specific machine learning workloads, creating substantial competitive advantages for enterprises requiring large scale artificial intelligence implementation.

Google’s BigQuery data warehouse service demonstrates superior performance for analytics queries on large datasets, processing petabyte scale data analysis 3 to 5x faster than equivalent Azure services while providing more cost effective storage and processing for data analytics workloads.

Microsoft Azure’s enterprise integration advantages include seamless identity management through Azure Active Directory (now Microsoft Entra ID), which provides single sign on integration with Office 365, Windows systems and thousands of third party enterprise applications.

The identity management integration reduces complexity and security risk for enterprises adopting cloud services while maintaining existing authentication systems and user management processes.

Azure’s hybrid cloud capabilities enable enterprises to maintain existing Windows Server infrastructure while extending capabilities through cloud services, creating migration pathways that preserve existing technology investments and reduce implementation risk.

| Cloud Service Capability | Microsoft Azure | Google Cloud Platform | Competitive Edge |
|---|---|---|---|
| Cloud Market Share | 23% of the global market | 11% of the global market | Microsoft Azure |
| Quarterly Revenue | $25 billion | $11.3 billion | Microsoft Azure |
| Year over Year Revenue Growth | 20% | 34% | Google Cloud Platform |
| Global Data Centre Regions | 60+ worldwide | 37 worldwide | Microsoft Azure |
| AI/ML Hardware Infrastructure | NVIDIA GPU clusters | TPU clusters (15 to 100× faster for specific AI workloads) | Google Cloud Platform |
| Data Analytics Performance | Azure Synapse Analytics | BigQuery (3 to 5× faster on large scale analytics) | Google Cloud Platform |
| Enterprise Integration | Full native integration with Office 365 and Active Directory | Limited enterprise integration features | Microsoft Azure |

The database and storage service comparison reveals technical performance differences that influence enterprise workload placement decisions and long term cloud strategy development.

Google Cloud’s Spanner globally distributed database provides strong consistency guarantees across global deployments that Azure’s equivalent services cannot match, enabling global application development with simplified consistency models and reduced application complexity.

However, Azure’s SQL Database integration with existing Microsoft SQL Server deployments provides migration advantages and familiar management interfaces that reduce adoption barriers for enterprises with existing Microsoft database infrastructure.

Cloud security capabilities are critical competitive factors given enterprise concerns about data protection, compliance requirements and cybersecurity risk management in cloud computing environments.

Both platforms provide comprehensive security features including encryption at rest and in transit, network security controls, identity and access management, compliance certifications and security monitoring capabilities.

Microsoft’s security advantage stems from integration with existing enterprise security infrastructure and comprehensive threat detection capabilities developed through Microsoft’s experience with Windows and Office security challenges.

Google Cloud’s security advantages include infrastructure level security controls and data analytics capabilities that support sophisticated threat detection and response.

The pricing comparison between Azure and Google Cloud reveals different approaches to market competition and customer value delivery that influence enterprise adoption decisions and total cost of ownership calculations.

Microsoft’s enterprise licensing agreements often include Azure credits and hybrid use benefits that reduce effective cloud computing costs for existing Microsoft customers, creating 20% to 30% cost advantages compared with published pricing rates.

Google Cloud’s sustained use discounts, preemptible instances and committed use contracts provide cost optimization opportunities for enterprises with predictable workload patterns and flexible computing requirements.

The competitive analysis of cloud computing platforms reveals Microsoft Azure’s superior market positioning through enterprise integration advantages, geographic coverage, hybrid cloud capabilities and customer relationship leverage that enable continued market share growth and revenue expansion.

Google Cloud Platform maintains technical performance advantages in artificial intelligence infrastructure, data analytics capabilities and specialized computing services that provide competitive differentiation for specific enterprise workloads requiring advanced technical capabilities.

However, Azure’s broader enterprise value proposition and integration advantages create superior positioning for general enterprise cloud adoption and platform standardization decisions.

Chapter Seven: Google vs Microsoft Productivity Software, Collaboration Platforms and Enterprise Application Dominance – The Digital Workplace Revolution

Microsoft’s dominance in enterprise productivity software represents one of the most entrenched competitive positions in the technology industry with Office 365 serving over 400 million paid subscribers globally and maintaining approximately 85% market share in enterprise productivity suites as of 2025.

This market position generates over $60 billion in annual revenue through subscription licensing, which provides predictable cash flows, and creates substantial barriers to competitive displacement through switching costs, user training requirements and ecosystem integration dependencies that alternative productivity platforms cannot easily replicate.

Google Workspace (formerly G Suite) serves approximately 3 billion users globally including free Gmail accounts, but enterprise paid subscriptions represent only 50 million users, demonstrating the significant disparity in commercial enterprise adoption between Google’s consumer focused approach and Microsoft’s enterprise optimized productivity software strategy.
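To put the two headline figures on a per seat basis, the short sketch below divides annual revenue by paid subscribers. Both inputs are stated above as lower bounds ("over"), so the quotient is only indicative; the function name `average_revenue_per_seat` is hypothetical, and the calculation assumes revenue is spread evenly across subscribers, ignoring the mix of consumer and commercial plans.

```python
def average_revenue_per_seat(annual_revenue: float, subscribers: float) -> float:
    """Crude average: annual revenue divided evenly across paid subscribers."""
    return annual_revenue / subscribers


# Figures cited in this chapter: over $60 billion revenue, over 400 million paid subscribers.
annual = average_revenue_per_seat(60e9, 400e6)
print(f"≈ ${annual:.0f} per subscriber per year, ≈ ${annual / 12:.2f} per month")
```

On these inputs the average works out to about $150 per subscriber per year, or about $12.50 per month.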

The subscription revenue differential reflects fundamental differences in enterprise feature requirements, security capabilities, compliance support and integration with existing enterprise infrastructure that favour Microsoft’s comprehensive enterprise platform approach over Google’s simplified cloud first productivity tools.

The document creation and editing capability comparison reveals Microsoft Office’s substantial feature depth and professional document formatting capabilities that Google Workspace cannot match for enterprises requiring sophisticated document production, advanced spreadsheet functionality and professional presentation development.

Microsoft Word’s advanced formatting, document collaboration, reference management and publishing capabilities provide professional authoring tools that content creators, legal professionals, researchers and other knowledge workers require for complex document production workflows.

Excel’s advanced analytics, pivot table functionality, macro programming and database integration capabilities support financial modelling, data analysis and business intelligence applications that Google Sheets cannot fully replicate through its simpler web interface.

Microsoft Office 365 Strengths

- Subscribers: 400 million paid

- Revenue: $60+ billion annually

- Market Share: 85% enterprise

- Features: Professional depth

- Integration: Teams, SharePoint, AD

- Security: Advanced threat protection

- Compliance: Industry certifications

Google Workspace Strengths

- Users: 3 billion (mostly free)

- Paid Subscribers: 50 million

- Collaboration: Real-time editing

- Architecture: Web first design

- Simplicity: Easy to use

- Mobile: Superior mobile apps

- Price: Competitive for SMBs

Google Workspace’s competitive advantages centre on real time collaboration: Google pioneered simultaneous multi user document editing, cloud storage integration and simplified sharing mechanisms that Microsoft subsequently adopted and enhanced through its own cloud infrastructure development.

Google Docs, Sheets and Slides provide seamless collaborative editing experiences with automatic version control, comment threading and suggestion mechanisms that facilitate team document development and review processes.

The web first architecture enables consistent user experiences across different devices and operating systems without requiring software installation or version management that traditional desktop applications require.

Microsoft Teams integration with Office 365 applications creates comprehensive collaboration environments that combine chat, voice, video, file sharing and application integration within unified workspace interfaces that Google’s fragmented approach through Google Chat, Google Meet and Google Drive cannot match for enterprise workflow optimization.

Teams’ integration with SharePoint, OneDrive and various Office applications enables seamless transition between communication and document creation activities while maintaining consistent security policies and administrative controls across the collaboration environment.

The enterprise security and compliance comparison demonstrates Microsoft’s substantial advantages in data protection, audit capabilities, regulatory compliance support and administrative controls that enterprise customers require for sensitive information management and industry compliance requirements.

Microsoft’s Advanced Threat Protection, Data Loss Prevention, encryption key management and compliance reporting capabilities provide comprehensive security frameworks that Google Workspace’s more limited security feature set cannot match for enterprises with sophisticated security requirements or regulatory compliance obligations.

Email and calendar functionality comparison reveals Microsoft Outlook’s superior enterprise features including advanced email management, calendar integration, contact management and mobile device synchronization capabilities that Gmail’s simplified interface approach cannot provide for professional email management requirements.

Outlook’s integration with Exchange Server, Active Directory and various business applications creates comprehensive communication and scheduling platforms that support complex enterprise workflow requirements and executive level communication management needs.

Mobile application performance analysis shows Google’s advantages in mobile first design and cross platform consistency that reflect the company’s web architecture and mobile computing expertise while Microsoft’s mobile applications demonstrate the challenges of adapting desktop optimized software for mobile device constraints and touch interface requirements.

Google’s mobile applications provide faster loading times, better offline synchronization and more intuitive touch interfaces compared to Microsoft’s mobile Office applications that maintain desktop interface paradigms less suitable for mobile device usage patterns.

The enterprise adoption pattern analysis reveals Microsoft’s competitive advantages in existing customer relationship leverage, hybrid deployment flexibility and comprehensive feature support that enable continued market share growth despite Google’s cloud native advantages and competitive pricing strategies.

Enterprise customers with existing Microsoft infrastructure investments face substantial switching costs including user retraining, workflow redesign, document format conversion and integration replacement that create barriers to Google Workspace adoption even when Google’s pricing and technical capabilities might otherwise justify considering a migration.

The competitive sustainability analysis indicates Microsoft’s productivity software dominance will likely persist through continued innovation in collaboration features, artificial intelligence integration and cloud service enhancement while maintaining the enterprise feature depth and security capabilities that differentiate Office 365 from Google Workspace’s consumer oriented approach.

Google’s opportunity for enterprise market share gains requires addressing feature depth limitations, enhancing security and compliance capabilities and developing migration tools that reduce switching costs for enterprises considering productivity platform alternatives.

Chapter Eight: Google vs Microsoft Artificial Intelligence, Machine Learning and Computational Intelligence Platforms – The Race for Cognitive Computing Supremacy

The artificial intelligence and machine learning landscape in which Google and Microsoft compete has experienced unprecedented advancement and market expansion over the past five years, with both corporations investing over $15 billion annually in AI research, development and infrastructure while pursuing fundamentally different strategies for AI commercialization and competitive advantage creation.

The strategic approaches reflect divergent philosophies regarding AI development pathways, commercial application priorities and long term positioning in the emerging artificial intelligence market that may determine technology industry leadership for the next decade.

Microsoft’s artificial intelligence strategy centres on practical enterprise applications and productivity enhancement through its strategic partnership with OpenAI, which provides access to GPT-4 and other advanced language models, while focusing development resources on integration with existing Microsoft products and services rather than on fundamental AI research and model development.

The Microsoft Copilot integration across Office 365, Windows, Edge browser and various enterprise applications demonstrates systematic AI capability deployment that enhances user productivity and creates competitive differentiation through AI powered features that competitors cannot easily replicate without comparable language model access and integration expertise.

Google’s AI development approach emphasizes fundamental research and proprietary model development through DeepMind and Google Research, which have produced breakthrough technologies including the Transformer neural network architecture, built around self attention, and various other foundational technologies that have influenced industry wide AI development directions.

The research first approach has generated substantial academic recognition and technology licensing opportunities while creating potential for breakthrough competitive advantages through proprietary AI capabilities that cannot be replicated through third party partnerships or commercial AI services.

The large language model comparison reveals Microsoft’s practical advantages through its OpenAI partnership access to GPT-4 technology, which has matched or outperformed Google’s Gemini models on many standardized benchmarks including Massive Multitask Language Understanding (MMLU), HumanEval code generation, HellaSwag commonsense reasoning and other academic AI evaluation frameworks, although published results vary by model version and evaluation setup.

GPT-4’s superior performance in reasoning tasks, reduced hallucination rates and more consistent factual accuracy provide measurable advantages for enterprise applications requiring reliable AI generated content and decision support capabilities.

Google’s recent AI model developments, including Gemini Pro and specialized models for specific applications, demonstrate continued progress in fundamental AI capabilities, but deployment integration and commercial application development lag behind Microsoft’s systematic AI feature rollout across existing product portfolios.

Google’s AI research advantages in computer vision, natural language processing and reinforcement learning provide foundational technology capabilities that may enable future competitive advantages, but current commercial AI deployment demonstrates less comprehensive integration and user value delivery compared with Microsoft’s enterprise AI enhancement strategy.

The AI infrastructure and hardware comparison reveals Google’s substantial advantages through Tensor Processing Unit (TPU) development which provides specialized computing capabilities for machine learning model training and inference that conventional GPU infrastructure cannot match for specific AI workloads.

TPU v4 and v5 systems are reported to deliver 10 to 100x performance improvements over GPU clusters for specific large scale machine learning training workloads, while providing more cost effective operation for AI model deployment at scale.

The specialized hardware advantage enables Google to maintain competitive costs for AI model training and provides technical capabilities that Microsoft cannot replicate through conventional cloud infrastructure approaches, creating potential long term advantages in AI model development and deployment efficiency.

Microsoft’s AI infrastructure strategy relies primarily on NVIDIA GPU clusters and conventional cloud computing resources supplemented by strategic partnerships and third party AI service integration (its own Azure Maia AI accelerator was announced only in late 2023), creating dependency on external technology providers while enabling faster deployment of proven AI capabilities without waiting on internal hardware development.

The approach provides immediate commercial advantages through access to state of the art AI models and services while potentially creating long term competitive vulnerabilities if hardware level AI optimization becomes critical for AI application performance and cost efficiency.

The computer vision and image recognition capability comparison demonstrates Google’s technical leadership through Google Photos’ object recognition, Google Lens visual search and various image analysis services that leverage massive training datasets and sophisticated neural network architectures developed through years of consumer product development and data collection.

Google’s computer vision models demonstrate superior accuracy across diverse image recognition tasks, object detection, scene understanding and visual search applications that Microsoft’s equivalent services cannot match through Azure Cognitive Services or other Microsoft AI offerings.

Natural language processing service comparison reveals Microsoft’s advantages in enterprise language services through Azure Cognitive Services which provide comprehensive APIs for text analysis, language translation, speech recognition and document processing that integrate seamlessly with Microsoft’s enterprise software ecosystem.

Microsoft’s translation services support 133 languages. Google Translate now supports more than 240 after adding 110 languages in 2024, so Microsoft’s edge in translation lies in comparable or superior quality for business document translation and professional communication applications rather than in language coverage.

The artificial intelligence research publication analysis demonstrates Google’s substantial academic contribution leadership with over 2,000 peer reviewed research papers published annually across premier AI conferences including Neural Information Processing Systems (NeurIPS), International Conference on Machine Learning (ICML), Association for Computational Linguistics (ACL) and Computer Vision and Pattern Recognition (CVPR).

Google’s research output receives higher citation rates and influences academic research directions more significantly than Microsoft’s research contributions, demonstrating leadership in fundamental AI science advancement that may generate future competitive advantages through breakthrough technology development.

Microsoft Research’s AI publications focus more heavily on practical applications and enterprise integration opportunities with approximately 800 peer reviewed papers annually that emphasize commercially viable AI applications rather than fundamental research advancement.

The application research approach aligns with Microsoft’s commercialization strategy while potentially limiting contribution to foundational AI science that could generate breakthrough competitive advantages through proprietary technology development.

The AI service deployment and integration analysis reveals Microsoft’s superior execution in practical AI application development through systematic integration across existing product portfolios while Google’s AI capabilities remain more fragmented across different services and applications without comprehensive integration that maximizes user value and competitive differentiation.

Microsoft Copilot’s deployment across Word, Excel, PowerPoint, Outlook, Teams, Windows and other Microsoft products creates unified AI enhanced user experiences that Google cannot replicate through its diverse product portfolio without comparable AI integration strategy and execution capability.

Google’s AI deployment demonstrates technical sophistication in specialized applications including search result enhancement, YouTube recommendation algorithms, Gmail spam detection and various consumer AI features but lacks the systematic enterprise integration that creates comprehensive competitive advantages and user productivity enhancement across business workflow applications.

The fragmented AI deployment approach limits the cumulative competitive impact of Google’s substantial AI research investment and technical capabilities.

The competitive advantage sustainability analysis in artificial intelligence reveals Microsoft’s superior positioning through strategic partnership advantages, systematic enterprise integration and practical commercial deployment that generates immediate competitive benefits and customer value while Google maintains advantages in fundamental research, specialized hardware and consumer AI applications that may provide future competitive advantages but currently generate limited commercial differentiation and revenue impact compared to Microsoft’s enterprise AI strategy.

Chapter Nine: Google vs Microsoft Digital Advertising Technology, Marketing Infrastructure and Monetization Platform Analysis – The Economic Engine of Digital Commerce

Google’s advertising technology platform represents one of the most sophisticated and financially successful digital marketing infrastructures ever developed, generating approximately $238 billion in advertising revenue during 2023 (out of Alphabet’s total revenue of about $307 billion) across Google Search, YouTube, the Google Display Network and various other advertising inventory sources that collectively reach over 90% of internet users globally through direct properties and publisher partnerships.

This advertising revenue scale exceeds the gross domestic product of most countries and demonstrates the economic impact of Google’s information intermediation and audience aggregation capabilities across the global digital economy.

The Google Ads platform serves over 4 million active advertisers globally, ranging from small local businesses spending hundreds of dollars monthly to multinational corporations allocating hundreds of millions of dollars annually through Google’s advertising auction systems and targeting technologies.

The advertiser diversity and spending scale create network effects that reinforce Google’s market position through improved targeting accuracy, inventory optimization, and advertiser tool sophistication that smaller advertising platforms cannot achieve without comparable audience scale and data collection capabilities.

Microsoft’s advertising revenue through Microsoft Advertising (formerly Bing Ads) and LinkedIn advertising totals approximately $18 billion annually, less than 8% of Google’s advertising revenue, despite substantial investment in search technology, the acquisition of LinkedIn’s professional network and various advertising technology development initiatives. The revenue disparity reflects fundamental differences in audience reach, targeting capabilities, advertiser adoption and monetization efficiency that create substantial competitive gaps in digital advertising market positioning and financial performance.

The search advertising effectiveness comparison reveals Google’s decisive advantages in click through rates, conversion performance and return on advertising spend that drive advertiser preference and budget allocation toward Google Ads despite potentially higher costs per click than Microsoft Advertising.

Google’s search advertising delivers average click through rates of 3.17% across all industries compared to Bing’s 2.83% average while conversion rates average 4.23% for Google Ads compared to 2.94% for Microsoft Advertising, according to independent digital marketing agency performance studies and advertiser reporting analysis.
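Click through rate and conversion rate compound along the ad funnel, so the two gaps above multiply rather than add. The minimal sketch below chains the cited averages into expected conversions per 1,000 impressions. It assumes conversion rate means conversions per click (the usual definition in agency benchmarks) and that the two averages can be multiplied, which strictly holds only for the same mix of industries and campaigns; cost per click is not modelled, so the result says nothing about return on ad spend. The function name is hypothetical.

```python
def conversions_per_thousand(ctr: float, conversion_rate: float) -> float:
    """Expected conversions per 1,000 ad impressions:
    impressions -> clicks (click through rate) -> conversions (conversion rate per click)."""
    return 1000 * ctr * conversion_rate


google = conversions_per_thousand(0.0317, 0.0423)     # 3.17% CTR, 4.23% conversion rate
microsoft = conversions_per_thousand(0.0283, 0.0294)  # 2.83% CTR, 2.94% conversion rate
print(f"Google: {google:.2f}, Microsoft: {microsoft:.2f}, ratio: {google / microsoft:.2f}")
```

With these averages Google yields about 1.34 conversions per 1,000 impressions against about 0.83 for Microsoft Advertising, roughly 1.6 times as many, even though neither individual rate differs by that much.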

The targeting capability analysis demonstrates Google’s substantial advantages through comprehensive user data collection across Search, Gmail, YouTube, Chrome browser, Android operating system and various other Google services that create detailed user profiles enabling precise demographic, behavioural and interest advertising targeting.

Google’s advertising platform processes over 8.5 billion searches daily, analyses billions of hours of YouTube viewing behaviour and tracks user interactions across millions of websites through Google Analytics and advertising tracking technologies that provide targeting precision that Microsoft’s more limited data collection cannot match.

Microsoft’s advertising targeting relies primarily on Bing search data, LinkedIn professional profiles and limited Windows operating system telemetry. These sources provide substantially less comprehensive user profiling than Google’s multi-service data integration approach.

LinkedIn’s professional network data provides unique B2B targeting capabilities for business advertising campaigns. However, its professional focus limits audience reach and targeting options for consumer marketing, which accounts for the majority of digital advertising spending.

The display advertising network comparison reveals Google’s overwhelming scale advantages through partnerships with millions of websites, mobile applications and digital publishers that provide advertising inventory reaching over 2 billion users globally through the Google Display Network.

The network scale enables sophisticated audience targeting, creative optimization and campaign performance measurement. Smaller advertising networks, with fewer publisher partnerships and less audience reach, cannot provide these capabilities.

Microsoft’s display advertising network operates through MSN properties, Edge browser integration and various publisher partnerships that reach approximately 500 million users monthly, providing substantially smaller scale and targeting precision compared to Google’s display advertising infrastructure.

The limited network scale constrains targeting optimization, creative testing opportunities and campaign performance measurement capabilities that advertisers require for effective display advertising campaign management.

The video advertising analysis demonstrates YouTube’s dominant position as the world’s largest video advertising platform. It has over 2 billion monthly active users who watch over 1 billion hours of video daily, which creates premium inventory for both brand marketing and performance advertising campaigns.

YouTube’s advertising revenue exceeded $31 billion in 2023. This gives Google advantages in video marketing that competitors cannot replicate without a comparable video platform and audience engagement.

Microsoft’s video advertising capabilities remain limited primarily to Xbox gaming content and various partnership arrangements that provide minimal video advertising inventory compared to YouTube’s scale and audience engagement.

The absence of a major video platform creates competitive disadvantages in video advertising market segments that represent growing portions of digital advertising spending and brand marketing budget allocation.

The e-commerce advertising integration analysis reveals Google Shopping’s substantial advantages through product listing integration, merchant partnerships and shopping search functionality that enable direct product discovery and purchase facilitation within Google’s search and advertising ecosystem.

Google Shopping advertising revenue benefits from integration with Google Pay, merchant transaction tracking and comprehensive e-commerce analytics, which create competitive advantages in retail advertising and product marketing campaigns.

Microsoft’s e-commerce advertising capabilities remain limited primarily to Bing Shopping integration and various partnership arrangements. These provide minimal e-commerce advertising features compared with Google’s comprehensive shopping advertising platform and merchant service integration.

The limited e-commerce advertising development constrains Microsoft’s participation in retail advertising, a segment that represents a rapidly growing portion of digital advertising spending.

The advertising technology innovation analysis demonstrates Google’s continued leadership in machine learning optimization, automated bidding systems, creative testing platforms and performance measurement tools that provide advertisers with sophisticated campaign management capabilities and optimization opportunities.

Google’s advertising platform incorporates advanced artificial intelligence for bid optimization, audience targeting, creative selection and campaign performance prediction that delivers superior advertising results and return on investment for advertiser campaigns.

Microsoft’s advertising technology development focuses primarily on LinkedIn’s professional advertising features and incremental Bing Ads enhancements. This work cannot match the breadth of Google’s advertising platform innovation and machine learning optimization.

The limited advertising technology development constrains Microsoft’s competitive positioning and advertiser adoption compared to Google’s continuously advancing advertising infrastructure and optimization tools.

The competitive analysis of digital advertising technology reveals Google’s overwhelming dominance across audience reach, targeting precision, platform sophistication and advertiser adoption that creates substantial barriers to meaningful competition from Microsoft’s advertising offerings.

Microsoft maintains niche advantages in professional B2B advertising through LinkedIn and provides cost-effective alternatives for specific advertising applications. Even so, Google’s comprehensive advertising ecosystem and superior performance metrics ensure its continued market leadership and revenue growth in digital advertising markets.

Chapter Ten: Google vs Microsoft Consumer Hardware, Device Ecosystem Integration and Platform Control Mechanisms – The Physical Gateway to Digital Services

The Google vs Microsoft consumer hardware market represents a critical competitive dimension. Here both corporations attempt to establish direct customer relationships, control user experience design and create ecosystem lock-in mechanisms that reinforce competitive advantages across their software and service offerings.

However, the two companies’ strategic approaches, product portfolios and market success reflect fundamentally different capabilities and priorities, and these differences influence long-term competitive positioning in consumer technology markets.

Google’s consumer hardware strategy encompasses Pixel smartphones, Nest smart home devices, Chromebook partnerships and various experimental hardware products designed primarily to showcase Google’s software capabilities and artificial intelligence features rather than generate substantial hardware revenue or achieve market leadership in specific device categories.

The hardware portfolio serves as reference implementations for Android, Google Assistant and other Google services while providing data collection opportunities and ecosystem integration that reinforce Google’s core advertising and cloud service business models.

Microsoft’s consumer hardware approach focuses on premium computing devices through the Surface product line, gaming consoles through Xbox and various input devices. These products are designed to differentiate Microsoft’s software offerings and capture higher-margin hardware revenue from professional and gaming market segments.

The hardware strategy emphasizes integration with Windows, Office and Xbox services while targeting specific user segments willing to pay premium prices for Microsoft-optimized hardware experiences.

The smartphone market analysis reveals that Google’s Pixel devices hold minimal market share, despite advanced computational photography, exclusive Android features and guaranteed software update support that demonstrate Google’s mobile technology capabilities.

Pixel smartphone sales totalled approximately 27 million units globally in 2023, a low single-digit share of the global smartphone market. Their revenue impact was limited compared with the revenue Google derives from Android installations on other manufacturers’ devices.

Google’s smartphone strategy prioritizes technology demonstration and AI feature showcase over market share growth or revenue generation with Pixel devices serving as reference platforms for Android development and machine learning capability demonstration rather than mass market consumer products.

The limited commercial success reflects Google’s focus on software and service revenue rather than hardware business development while providing valuable user experience testing and AI algorithm training opportunities.

Microsoft withdrew from mainstream smartphone hardware after discontinuing Windows Phone, and the later Android-based Surface Duo never reached mass-market scale. As a result, Microsoft does not participate directly in the mobile device market, which is the primary computing platform for billions of users globally.

The strategic exit creates dependency on third-party hardware manufacturers. Compared with competitors that run successful mobile hardware platforms, it limits Microsoft’s ability to control mobile user experiences, collect mobile usage data and integrate mobile services with its software ecosystem.

The laptop and computing device comparison demonstrates Microsoft’s Surface product line success in premium computing market segments with Surface devices generating over $6 billion in annual revenue while achieving high customer satisfaction ratings and professional market penetration.

Surface Pro tablets, Surface Laptop computers and Surface Studio all-in-one systems provide differentiated computing experiences optimized for Windows and Office applications. They command premium pricing through superior build quality and innovative form factors.

Google’s Chromebook strategy focuses on education market penetration and budget computing segments through partnerships with hardware manufacturers rather than direct hardware development and premium market positioning.

Chromebook devices running Chrome OS achieved significant education market adoption during remote learning periods but remain limited to specific use cases and price sensitive market segments without broader consumer or professional market penetration.

The gaming hardware analysis reveals Microsoft’s Xbox console platform as a successful consumer hardware business generating over $15 billion annually through console sales, game licensing, Xbox Game Pass subscriptions and gaming service revenue.

Xbox Series X and Series S consoles demonstrate technical performance competitive with Sony’s PlayStation while providing integration with Microsoft’s gaming services, cloud gaming and PC gaming ecosystem that creates comprehensive gaming platform experiences.

Google’s gaming efforts, the Stadia cloud gaming service and its Stadia Controller, ended in commercial failure. Google shut the service down in January 2023, a little over three years after its November 2019 launch, despite substantial investment and technical capabilities.

The Stadia failure illustrates limitations in Google’s hardware development, market positioning and consumer product management capabilities compared to established gaming industry competitors.

The smart home and Internet of Things device analysis demonstrates Google’s Nest ecosystem success in smart home market penetration through thermostats, security cameras, doorbell systems and various connected home devices that integrate with Google Assistant voice control and provide comprehensive smart home automation capabilities.

Nest device sales and service subscriptions generate substantial recurring revenue. They also create data collection opportunities and ecosystem lock-in that reinforce Google’s consumer service offerings.

Microsoft’s smart home hardware presence remains minimal with limited Internet of Things device development and reliance on third party device integration through Azure IoT services rather than direct consumer hardware development.

The absence of consumer IoT hardware creates missed opportunities for direct consumer relationships, ecosystem integration and data collection that competitors achieve through comprehensive smart home device portfolios.

The wearable technology comparison reveals Google’s substantial advantages through its Fitbit acquisition and Wear OS development. Together these provide comprehensive fitness tracking, health monitoring and smartwatch capabilities across multiple device manufacturers and price points.

Google’s wearable technology portfolio includes fitness trackers, smartwatches and health monitoring devices that integrate with Google’s health services and provide continuous user engagement and data collection opportunities.

Microsoft’s wearable technology development remains limited to discontinued Microsoft Band fitness tracking devices and limited mixed reality hardware through HoloLens enterprise applications, creating gaps in consumer wearable market participation and personal data collection compared to competitors with successful wearable device portfolios and health service integration.

The competitive analysis of consumer hardware reveals Google’s superior positioning in smartphone reference implementation, smart home ecosystem development and wearable technology integration while Microsoft demonstrates advantages in premium computing devices and gaming hardware that generate substantial revenue and reinforce enterprise software positioning.

However, both companies face limitations in achieving mass market hardware adoption and ecosystem control compared with dedicated hardware manufacturers that have superior manufacturing capabilities and market positioning expertise.

Chapter Eleven: Google vs Microsoft Privacy, Security, Data Protection and Regulatory Compliance Infrastructure – The Foundation of Digital Trust

The privacy and security practices of Google and Microsoft are critical competitive factors. They influence consumer trust, regulatory compliance costs, enterprise adoption decisions and long-term sustainability in markets with increasing privacy regulation and growing cybersecurity threats.